I’ve been slowly working on upgrading the server that hosts this website (along with other things such as email, news reader, backups, etc.). I started, hard as it is for me to believe, 3 years ago. Somewhere along the way I ran into some sort of issue with the ASUS motherboard, and after a few attempts to get it fixed via the RMA process, I ended up moving to a Gigabyte-based motherboard. I’ve even done a hard drive swap since I started the upgrade process.

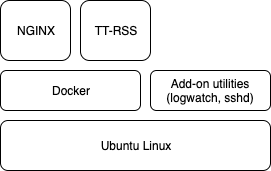

I started with a basic Linux install, layered on some useful system utilities and most importantly docker. Most of this is covered in the rebuild post. After that, I try to manage all of the “services” I want to run in containers. I’m cheating a bit and the containers are fairly monolithic, but this is fine for my needs as I’m primarily using containers as a way of managing the software versions vs. trying to build a scalable collection of micro-services.

When I initially started, I figured that I’d use the wordpress container. Since I run several wordpress-based sites from lowtek, I was just going to run multiple containers and front them all with nginx to distribute the traffic, with nginx of course in a container too.

Along the way trying to get this setup to work, I learned a few valuable lessons about docker networking. The short version is this: if you want docker containers to be able to easily work with each other, create a docker user-defined bridge network and make sure all of the containers that need to talk to each other are attached to that network. Then container A can talk to container B using the container name as the local DNS name, and things just work.
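As a rough sketch of that networking lesson, the commands look something like the following. The network name `blognet`, the container names and the image names are placeholders I’ve made up for illustration, not the exact ones from my setup, and the commands are wrapped in a function so nothing runs until you call it.

```shell
# Hypothetical names throughout: "blognet", "wordpress", "nginx".
setup_blog_network() {
    net="${1:-blognet}"

    # Create a user-defined bridge network.
    docker network create "$net"

    # Start both containers attached to that network.
    docker run -d --name wordpress --network "$net" wordpress
    docker run -d --name nginx --network "$net" -p 80:80 -p 443:443 nginx
}
```

Calling `setup_blog_network` should leave nginx able to reach the other container at `http://wordpress/`, since docker’s embedded DNS resolves container names on user-defined networks. A container that is already running can be attached afterwards with `docker network connect blognet CONTAINER`.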

WordPress generally doesn’t talk https; it’s really just http. This didn’t seem like a problem because nginx is pretty easy to set up with https and Let’s Encrypt to provide an always secure connection. Unfortunately, since wordpress is running as a container itself, it doesn’t really know that it’s set up in a sub-directory, nor that the correct URL for it is https. There are simple hacks you can do to the wp-config.php file to make this work, and I did finally succeed in getting all the bits happy. However, when I looked back at what I’d created, it didn’t justify the complexity I was dealing with. My nginx needed to reverse proxy to the wordpress containers. Each wordpress install needed extra magic to make it aware of where it was really running, and upgrades to wordpress were wonky because upgrading the container doesn’t really upgrade a running install (the files on disk are what matter).

So I bailed, and just installed wordpress into my nginx deployment. This was pretty straightforward. I added a MySQL container to host the data store for wordpress and everything fell together nicely. I just did a backup of the database on my old server, and an import on the new one.

Nginx makes it very easy to run a full https website. The linuxserver.io project has a well-maintained image that includes Let’s Encrypt and everything I wanted. Unfortunately, many links in my existing posts were to http://, including images. Chrome, and I assume other browsers will follow, is starting to become more strict about mixed content pages. Also, Google search results tend to favour secured sites over unsecured ones.

I found a good post that covered moving wordpress to a new URL, which is sort of what I want to do. I’m moving from http://lowtek.ca/roo to https://lowtek.ca/roo.

The key commands are:

```sql
UPDATE wp_posts SET guid = replace(guid, 'http://www.oldurl','http://www.newurl');

UPDATE wp_posts SET post_content = replace(post_content, 'http://www.oldurl', 'http://www.newurl');

UPDATE wp_postmeta SET meta_value = replace(meta_value,'http://www.oldurl','http://www.newurl');
```

This feels pretty scary, so two things. (1) I want to back up my database, and (2) I’d like to see what it is I’m about to replace.

Backup is actually really easy (this is why containers are cool).

```shell
docker exec CONTAINER /usr/bin/mysqldump -u root --password=root DATABASE > backup.sql
```
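With the dump on disk, one cheap way to get a feel for how much is about to change is to count the old-style URLs in `backup.sql`. The `count_url` helper below is a name I’ve made up for this sketch; it just counts literal matches, so treat the number as a rough guide rather than an exact count of rows.

```shell
# count_url PATTERN FILE
# Print how many times the literal string PATTERN appears in FILE.
count_url() {
    # -o prints each match on its own line, -F treats PATTERN as a fixed string
    grep -oF -- "$1" "$2" | wc -l | tr -d ' '
}
```

For example, `count_url 'http://lowtek.ca/' backup.sql` and `count_url 'http://www.lowtek.ca/' backup.sql` give separate counts for the two forms of the URL. If things go wrong later, the same file can be fed back in with `docker exec -i CONTAINER /usr/bin/mysql -u root --password=root DATABASE < backup.sql`.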

To keep things a bit more brief, I’ll just lay out the steps I took to get through the second part.

```
# get a shell in docker container
docker exec -it mysql bash

# run mysql cli
mysql -u root --password

# pick the right db
use my_wp_db;

# dump guid list - this will show you what you're changing
select guid from wp_posts;

# bravely run the replace
update wp_posts set guid = replace(guid, 'http://lowtek.ca/', 'https://lowtek.ca/');
Query OK, 516 rows affected (0.01 sec)
Rows matched: 1513 Changed: 516 Warnings: 0
# ^^ looks pretty good
#
# Turns out I had a bunch of www.lowtek.ca's as well
update wp_posts set guid = replace(guid, 'http://www.lowtek.ca/', 'https://lowtek.ca/');
Query OK, 993 rows affected (0.02 sec)
Rows matched: 1513 Changed: 993 Warnings: 0
```

This only took care of one set of the links. I needed to also do post_content in wp_posts and meta_value in wp_postmeta. Reviewing my blog postings, nearly all of the posts/pages are now fully https with no warnings from Chrome about mixed content.
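For those remaining two columns, a small helper along these lines can print the statements for each URL pair so you can read them before running anything. `url_fix_sql` is a hypothetical name for this sketch, and it assumes the URLs contain no single quotes or `%` characters. One thing worth checking first: meta_value can hold PHP-serialized data with embedded string lengths, and a replace that changes the length of a string can break those values.

```shell
# url_fix_sql OLD NEW
# Print the post_content and meta_value UPDATE statements for one URL pair.
url_fix_sql() {
    old="$1"
    new="$2"
    printf "UPDATE wp_posts SET post_content = replace(post_content, '%s', '%s');\n" "$old" "$new"
    printf "UPDATE wp_postmeta SET meta_value = replace(meta_value, '%s', '%s');\n" "$old" "$new"
}
```

Once the output looks right, it can be piped into the database, for example `url_fix_sql 'http://lowtek.ca/' 'https://lowtek.ca/' | docker exec -i mysql mysql -u root --password=root my_wp_db`, reusing the container name, credentials and database name from the examples above.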

There were a few standouts. A couple of Creative Commons images I’d linked to needed to be fixed to use https. During the trip down memory lane I found a few busted posts due to images I’d linked which were no longer hosted at that location. I also came across the sad fact that some of my friends who used to host stuff have let those sites expire, or have changed them significantly enough that the information I was linking to doesn’t really exist anymore.

This got me thinking about the state of the web today, and the fact that social media sites have taken over from the websites people used to maintain themselves. It’s great to be able to easily communicate with friends and others via things like Twitter, Facebook or Instagram, but it’s also unfortunate that there is a lack of diversity in how people are expressing themselves. You also have a lot less control over the information once you give it to one of these platforms to host for you. Maybe we’ll see the pendulum swing back a little.