LineageOS recently pushed official 17.1 images for my phone, the Pixel XL (marlin). I’d been stalling a little on the upgrade, as the only path is a manual one and I was concerned I would lose all of my application state.

I finally took the plunge, partly because I was keen to move to the new Android 10 features, and partly because LineageOS has stopped their 16.0 builds, which means no new security patch levels on that branch.

It turns out that while you need to take some manual steps, the upgrade path was very smooth. Still, here are the steps I took:

(1) Backup

- I already make use of SimpleSSHD to support nightly SSH based backups.

- Export settings from apps that are not backed by cloud services: k9mail, feedme, etc.

- Run SMS Backup+ to archive all my SMS to my Gmail account.

Usually I run TWRP recovery, but LineageOS has moved to their own recovery. Unfortunately the LineageOS recovery doesn’t support Nandroid backups. You can however still use adb backup.

To perform an adb backup, have the phone running as normal. Enable developer options, and then allow both adb (debugging) and adb root access. The backup needs adb to be able to connect, and it needs root access to read the files.
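Before starting the backup, it can help to confirm that adb actually sees the phone and that the debugging prompt has been accepted. The following is a minimal sketch, assuming `adb` is on your `PATH` and only one device is plugged in; the function name `adb_ready` is made up for illustration.

```shell
# Sketch: report whether adb sees exactly one authorized device.
# `adb devices` prints a header line, then one "<serial> <state>" line per device.
adb_ready() {
    # Keep only the state column of the device lines (skip header and blank lines).
    states=$(adb devices | awk 'NR > 1 && NF >= 2 { print $2 }')
    case "$states" in
        device)       echo "ready"; return 0 ;;
        unauthorized) echo "unauthorized: accept the prompt on the phone"; return 1 ;;
        "")           echo "no device found"; return 1 ;;
        *)            echo "unexpected state(s): $states"; return 1 ;;
    esac
}
```

Calling `adb_ready && adb root` would then only ask for root once the connection looks healthy.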

On your desktop machine:

    $ adb root
    $ adb backup -apk -shared -all -f pixel-june-2020.ab

Your phone will prompt you for confirmation of the backup process. Once it starts to run, it’ll take a while (mine was 8GB over USB2).

Additionally I manually copied the backup files (exported settings) I had made.
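A quick sanity check on the resulting `.ab` file is to look at its plain-text header. This is a sketch under the assumption that the file follows the usual adb backup layout (a magic line `ANDROID BACKUP`, then format version, compression flag, and encryption type, each on its own line); `check_ab_header` is a hypothetical helper name, and passing this check does not prove the payload is restorable.

```shell
# Sketch: print the plain-text header fields of an adb backup file.
# Assumed layout: magic line, format version, compression flag, encryption type.
check_ab_header() {
    file=$1
    # Only read the first few bytes; the (usually compressed) payload follows the header.
    header=$(head -c 64 "$file" | head -n 4 | tr -d '\000')
    magic=$(printf '%s\n' "$header" | sed -n '1p')
    version=$(printf '%s\n' "$header" | sed -n '2p')
    compressed=$(printf '%s\n' "$header" | sed -n '3p')
    encryption=$(printf '%s\n' "$header" | sed -n '4p')
    if [ "$magic" != "ANDROID BACKUP" ]; then
        echo "not an adb backup: $file" >&2
        return 1
    fi
    echo "version=$version compressed=$compressed encryption=$encryption"
}
```

For example, `check_ab_header pixel-june-2020.ab` should print one summary line if the header is intact, and fail otherwise.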

(2) Download the ROM

Grab the 17.1 marlin ROM from LineageOS. I also run the Google stuff, so I went to OpenGApps to get that as well. I initially picked ‘stock’ because I thought, hey, the Pixel is as Google a phone as you might get. The list of stock apps is pretty close to what I have installed anyway, despite the fact that my current gapps is the nano version.

Once I upgrade my phone to a later version of the hardware, I’ll probably stick with the Pixel line. LineageOS isn’t officially supported on the more recent hardware, but the delta between LineageOS and Google stock has become pretty slim too. I may finally give up on custom firmware and run stock, we’ll have to see.

[If you read on, you’ll note that I ended up falling back to the nano pico gapps build. Oh well]

(3) Check hashes of all downloads

Always check the hashes. I’ve personally had bad downloads. You don’t want a bad file to cause you additional grief, and it’s easy to check.
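As a sketch of what checking looks like on a Linux desktop, assuming the coreutils tools `md5sum` and `sha256sum` are installed and that you copy the expected digest from the download page by hand (`verify_sum` is a hypothetical helper, not part of any project's tooling):

```shell
# Sketch: compare a file's checksum against the value published on the download page.
# Usage: verify_sum <md5sum|sha256sum> <file> <expected-hex-digest>
verify_sum() {
    tool=$1
    file=$2
    # Normalise case so an upper-case published digest still matches.
    expected=$(printf '%s' "$3" | tr 'A-F' 'a-f')
    # coreutils prints "<digest>  <filename>"; keep only the digest.
    actual=$("$tool" "$file" | awk '{ print $1 }')
    if [ -n "$actual" ] && [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (got ${actual:-nothing}, expected $expected)" >&2
        return 1
    fi
}
```

Run it once per download, with whichever digest type the site publishes for that file.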

(4) Upgrade

We can follow the LineageOS upgrade wiki to do the install.

My device did reboot to a blank screen, but once I started the next command, I got a visual progress display. It is possible I just didn’t wait long enough, but either way things started up just fine.

    $ adb sideload lineage-17.1-20200605-nightly-marlin-signed.zip
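For reference, the reboot and the sideload can be chained. This is only a sketch of the sequence as I understand it from the upgrade wiki, assuming a reasonably recent `adb` that supports `reboot sideload` and `wait-for-sideload`; the wrapper name `sideload_rom` is invented for illustration, and the wiki remains the authority on the exact steps for your device.

```shell
# Sketch of the two-step sequence; pass the path to the ROM zip.
sideload_rom() {
    zip=$1
    [ -f "$zip" ] || { echo "missing file: $zip" >&2; return 1; }
    # Reboot the running phone straight into recovery's sideload mode.
    adb reboot sideload || return 1
    # Block until the device reports the sideload state (the screen may stay blank).
    adb wait-for-sideload || return 1
    # Push the package to the phone.
    adb sideload "$zip"
}
```

Waiting for the sideload state explicitly removes the guesswork about whether a blank screen means the phone is ready.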

If, like me, you intend to install the Google apps, then you want to avoid booting into normal mode at this point.

On the phone, in recovery, once the sideload has finished:

- Click Advanced

- Reboot to Recovery

- Once recovery starts again:

- Click Apply Update

- Apply from ADB

Now we sideload gapps:

    $ adb sideload <gapp file>

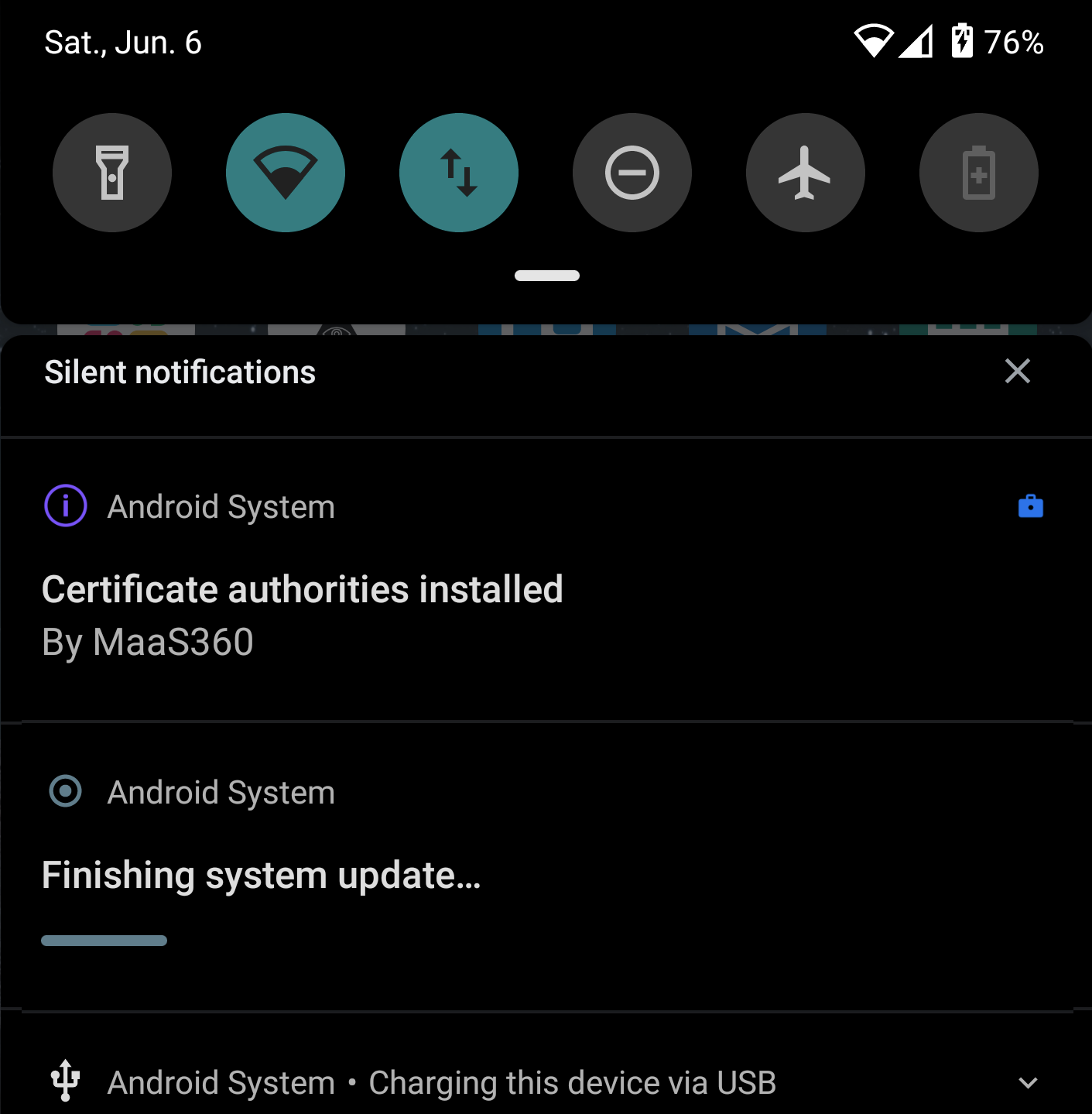

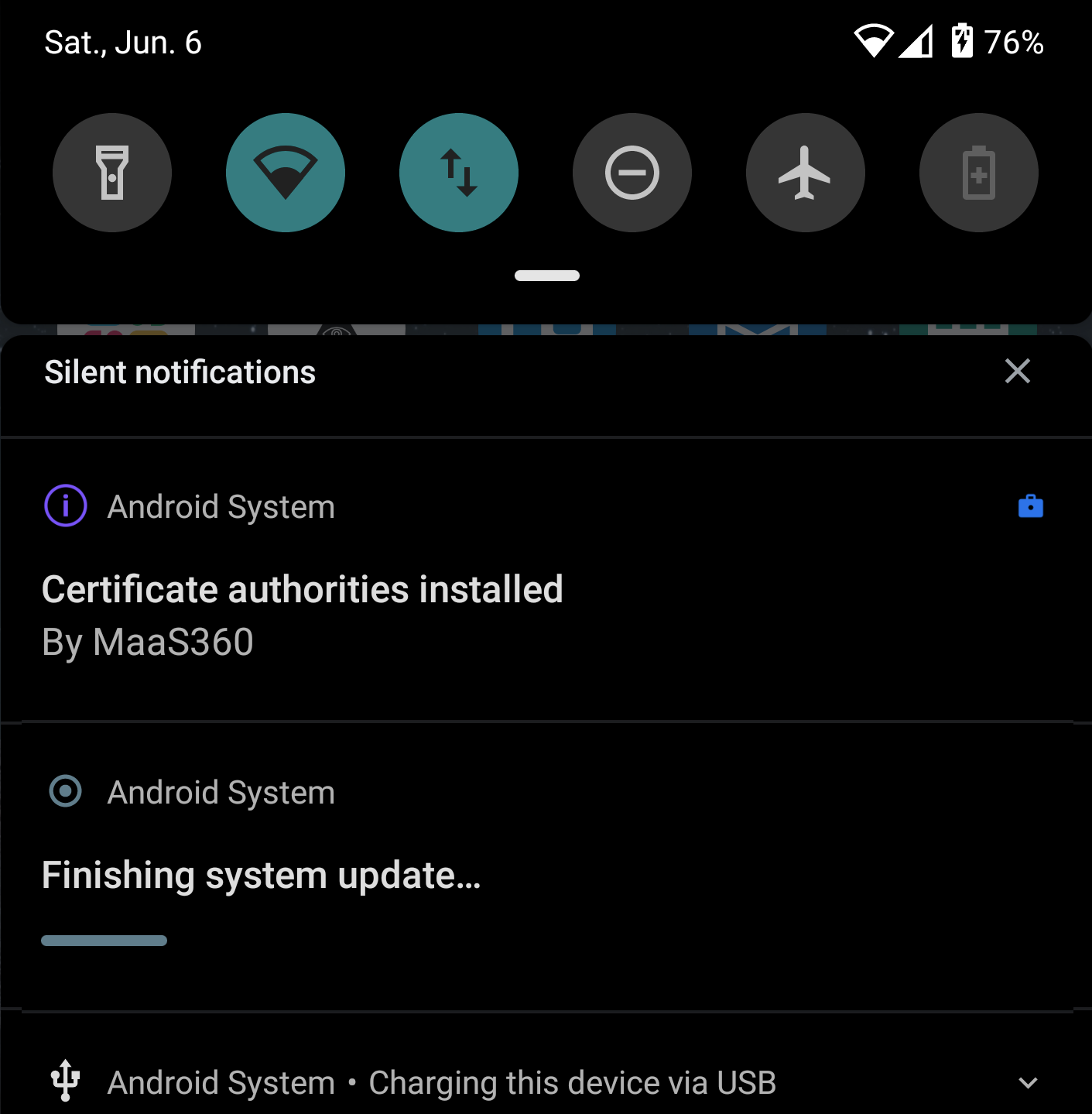

Note: It does appear that I got a new recovery as part of the lineage install.

Here is where I ran into trouble with the stock version of gapps. While the source file did match the md5sum, the install on the phone gave me a signature verification failure at 47% progress.

It turns out that other people have had exactly this problem.

The 47% appears to be a side effect of the sideload process. I banged my head on this a few times, until I carefully read what was actually being reported on the phone screen when the verification failed.

The real error: not enough system space to install the stock gapps. Sigh. How many times have I struggled with getting a computer to do something when the problem was that I didn’t carefully read the error message?

Downloading and installing the nano pico version worked fine, but my notes say that I still saw the signature verification failure (this may be due to the gapps approach to building the bundle).

(5) Reboot

And wait, and wait, and wait.. The first boot is always exciting and takes enough extra time that you start to worry something is wrong.

And.. it’s alive! All my apps/data are still “ok”. This is unexpected, I had prepared myself to do a full rebuild of things, but it seems that this upgrade path allows my applications and their state to persist.

There were some minor configuration differences (launcher UI settings reset to 5×5 instead of the 5×6 I prefer). This was easy to fix and my layout was fully restored.

A bunch of permission checks popped up too, but overall it was a mostly painless upgrade to Android 10. Lots of settings persisted, like my per-app mobile data preferences. Ringtones and sounds were busted, because the LineageOS sounds have been replaced by the Pixel ones.
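To confirm from the desktop that the phone really landed on Android 10 with a current security patch level, the build properties can be read over adb. A minimal sketch, assuming adb debugging is still enabled after the upgrade; `ro.build.version.release` and `ro.build.version.security_patch` are standard Android properties, while `ro.lineage.version` is assumed to be set on LineageOS builds, and `show_versions` is a made-up helper name.

```shell
# Sketch: print Android release, security patch level and LineageOS version over adb.
# ro.lineage.version is assumed to be present on LineageOS builds.
show_versions() {
    for prop in ro.build.version.release ro.build.version.security_patch ro.lineage.version; do
        # Strip any carriage return that older adb versions append to shell output.
        value=$(adb shell getprop "$prop" | tr -d '\r')
        echo "$prop=${value:-unknown}"
    done
}
```

Any property the device does not define is reported as `unknown` rather than as an empty value.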

(6) Epilogue

It’s been over a week, and no big surprises (which is good).

The whole Privacy stuff is great. I always liked Privacy Guard in LineageOS, and now that a similar function has been added to Android itself, I’m ok with letting that go. I’m a bit disappointed with the stock sounds available; I may just need to add the old LineageOS sounds as user sounds so I can get the ones I’m used to.