It is a common misconception that the DNS servers your system uses are tried in priority order. I had this misunderstanding for years, and I’ve seen many others with the same one.

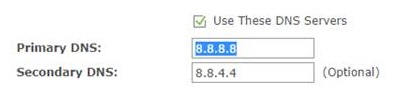

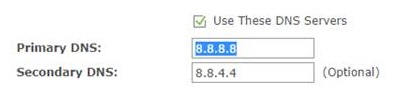

The problem comes from the router or OS setup where you can list a “Primary” and “Secondary” DNS server. This certainly gives you the impression that you have one that is ‘mostly used’ and a ‘backup one’ that is used if the first one is broken, or too slow. This is false, but confusingly also sometimes true.

Consider this Stack Exchange question and answer, or this Server Fault question. If you go searching, there are many more questions on this topic.

> Neither DNS resolver lists nor NS record sets are intrinsically ordered, so there is no “primary”. Clients are free to query whichever one they want in whichever order they want. For resolvers specifically, clients might default to using the servers in the same order as they were given to the client, but, as you’ve discovered, they also might not.

Let me also assure you from personal experience: there is no guarantee of order. Some systems will always try the “Primary” first, then fall back to the “Secondary”. Others will round-robin queries. Some will detect a single failure and re-order the two servers for all future queries. Some devices (Amazon Fire Tablets) will magically use a hard-coded DNS server if the configured ones are not working.
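To make those behaviours concrete, here is a minimal Python sketch that simulates three of them against the same two-server list. It sends no real DNS traffic; the function names, the server labels `pihole` and `public`, and the outage model are all hypothetical, and real clients are more complicated than this.

```python
from collections import Counter


def strict_order(servers, healthy, n_queries):
    """Always walk the list in the configured order; use the first healthy server."""
    counts = Counter()
    for _ in range(n_queries):
        for server in servers:
            if server in healthy:
                counts[server] += 1
                break
    return counts


def round_robin(servers, healthy, n_queries):
    """Start each query at the next server in the list, wrapping around."""
    counts = Counter()
    for i in range(n_queries):
        for offset in range(len(servers)):
            server = servers[(i + offset) % len(servers)]
            if server in healthy:
                counts[server] += 1
                break
    return counts


def reorder_on_failure(servers, outages, n_queries):
    """Move a server to the back after one failed query and never promote it again.

    `outages` is a set of (server, query_index) pairs that fail (hypothetical model).
    """
    order = list(servers)
    counts = Counter()
    for i in range(n_queries):
        for server in list(order):  # iterate over a snapshot while reordering
            if (server, i) in outages:
                order.remove(server)
                order.append(server)
                continue
            counts[server] += 1
            break
    return counts


servers = ["pihole", "public"]
both_up = {"pihole", "public"}

print(dict(strict_order(servers, both_up, 100)))                  # {'pihole': 100}
print(dict(round_robin(servers, both_up, 100)))                   # {'pihole': 50, 'public': 50}
print(dict(reorder_on_failure(servers, {("pihole", 10)}, 100)))   # {'pihole': 10, 'public': 90}
```

The same configuration produces three very different traffic splits, and in the last case a single failed query is enough to shift almost everything to the “Secondary”.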

Things get even more confusing because there are two things going on: the behaviour of the individual clients (like your laptop or phone), and the layers of DNS servers between you and the authoritative server. DNS is a core part of how the internet works, and there is lots of information on the different parts of DNS out there.

The names “Primary” and “Secondary” come from the server side of DNS. When you are hosting a system and configure the domain name to IP mapping, you set up your DNS records in the “Primary” system. The “Secondary” system is usually an automated replica of that “Primary”. This really has nothing to do with what the client devices are going to do with those addresses.

Another pitfall people run into when they think there is an ordering comes when they set up a Pi-hole for ad-blocking. They will use their new Pi-hole installation as the “Primary” and then use a popular public DNS server (like 8.8.8.8) as the “Secondary”. This configuration sort of works: at least some of the time, your client machine will hit your Pi-hole and ad-blocking will work. Then, unpredictably, it will not block an ad, because the client has used the “Secondary”.
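A rough back-of-the-envelope sketch shows why this feels so unpredictable. It assumes, purely for illustration, that a client sends a fixed 20% of its lookups to the “Secondary” and that each lookup is chosen independently; real clients cache answers and do not pick servers at random, and the function name is hypothetical.

```python
def chance_of_unblocked_lookup(secondary_share, ad_lookups):
    """Probability that at least one of `ad_lookups` ad-domain queries
    goes to the non-blocking server, assuming independent choices."""
    return 1 - (1 - secondary_share) ** ad_lookups


# Assumed 20% share of lookups going to the public "Secondary".
for lookups in (1, 5, 20):
    print(lookups, round(chance_of_unblocked_lookup(0.2, lookups), 3))
# 1 0.2
# 5 0.672
# 20 0.988
```

Under those assumptions, a page that triggers only a handful of ad-domain lookups is already more likely than not to have at least one of them bypass the Pi-hole.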

Advice: Assume all DNS servers are the same and will return the same answer. There is no ordering.
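One way to check a setup against that advice is to ask every configured server the same questions and flag any name where the answers differ. The sketch below is only an outline of that idea: `find_disagreements` is a hypothetical helper, and the `lookup` function is supplied by the caller, so here it is backed by made-up data rather than real DNS queries.

```python
def find_disagreements(servers, names, lookup):
    """Return {name: {server: answers}} for every name the servers disagree on.

    `lookup(server, name)` is a caller-supplied function returning a set of addresses.
    """
    disagreements = {}
    for name in names:
        answers = {server: frozenset(lookup(server, name)) for server in servers}
        if len(set(answers.values())) > 1:
            disagreements[name] = answers
    return disagreements


# Made-up answers standing in for real queries (hypothetical data).
FAKE_ANSWERS = {
    ("pihole", "ads.example"): {"0.0.0.0"},
    ("public", "ads.example"): {"203.0.113.7"},
    ("pihole", "blog.example"): {"198.51.100.4"},
    ("public", "blog.example"): {"198.51.100.4"},
}


def fake_lookup(server, name):
    return FAKE_ANSWERS[(server, name)]


result = find_disagreements(
    ["pihole", "public"], ["ads.example", "blog.example"], fake_lookup
)
print(sorted(result))  # ['ads.example']
```

Against real resolvers this is only a rough check, since names hosted on CDNs can legitimately resolve to different addresses depending on which resolver you ask.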

I personally run two Pi-hole installations. My “Primary” handles about 80% of the traffic, and the “Secondary” about 20%. This isn’t because my “Primary” is unavailable or too slow 20% of the time. It is simply that about 20% of client requests go to the “Secondary” for whatever reason (and a large amount of my traffic comes from my Ubuntu server machine). Looking deeper at the two Pi-hole dashboards, the mix of clients looks about the same, but the “Secondary” has fewer clients. It does seem fairly random.

If your ISP hands out IPv6 addresses, you may find that things get even more interesting, as your clients will also be assigned an IPv6 DNS address. This adds yet another interface to the client device and another potential DNS server (or two) that may be used for name lookups.

Remember, it’s always DNS.