Like many people, I have been working from home, and still am. You may have also heard about the chip shortage making things like laptops a bit more difficult to get your hands on, especially at the scale of a large company. This has delayed the usual upgrade cycle, and meant I was using a machine without an AppleCare warranty.

Until recently I was using a 2017 MacBook Pro (yes, the one with the bad butterfly key switches).

Right from the start the keyboard gave me problems. In the first few weeks my W key was janky and needed extra presses to work. It sorted itself out after a little while, and I discovered that if I was careful about dust and crumbs I could avoid problems. When problems did happen, giving it a good shake upside-down would usually help.

This time, the F key started jamming and then broke off entirely. Normal typing would dislodge the key, which was generally a pain. Apparently once one key breaks off, others are not far behind: a coworker of mine in the same situation proved this out, but with 2 busted keys. I guess it was time for a replacement.

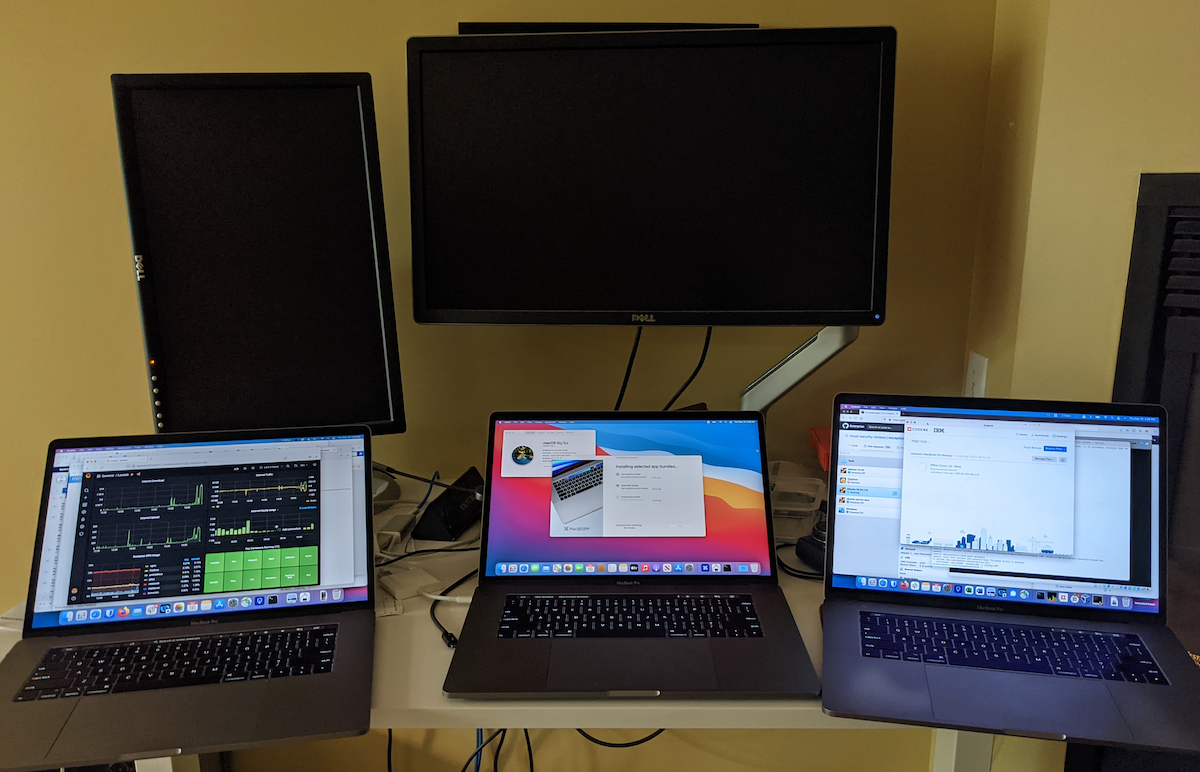

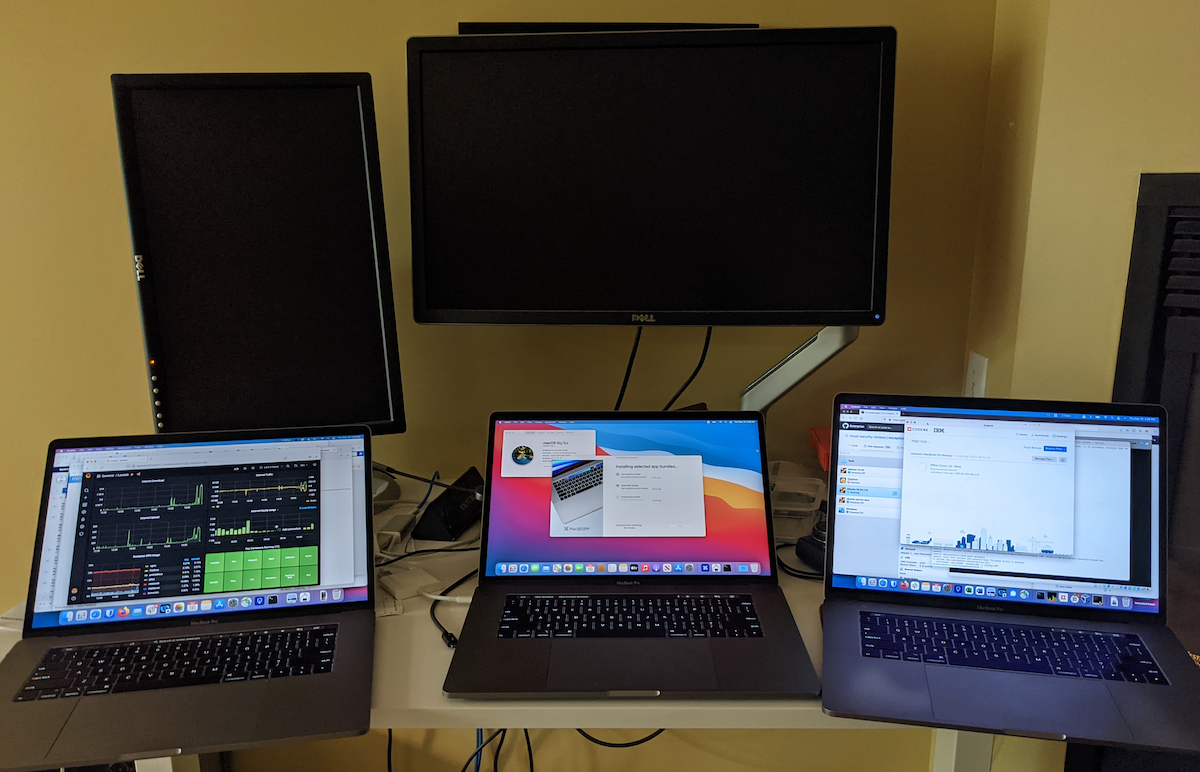

After the usual paperwork, I was back in business. Sort of: the first replacement had a busted microphone, which makes participating in e-meetings tough. The second replacement, a 2019 with a bit of warranty left, arrived and everything works. So now you know why the lead picture has 3 MacBooks in it.

The first replacement was a 2018 machine, a little faster than the 2017 but basically on par. I won’t say much about it because I only had it for a day or so. This is why the post is a tale of 2 MacBooks and not 3.

The 2017 was a great machine aside from the keyboard and the cursed Touch Bar. I don’t regret giving up my previous pre-Touch Bar MacBook Pro, because the 2017 was pretty slick and had USB-C charging.

The battery data from the 2017 does tell a longevity story:

| Battery Information | Value |
|---|---|
| Firmware Version | 702 |
| Cell Revision | 3925 |
| Full Charge Capacity (mAh) | 5238 |
| State of Charge (%) | 71 |
| **Health Information** | |
| Cycle Count | 781 |
| Condition | Normal |

It still had reasonable performance up to the day I stopped using it, which is not bad for a machine more than 4 years old. Its Geekbench scores were 867 single-core and 3363 multi-core. I also really liked the stickers I’d accumulated over time.

The 2019, while previously used, has a noticeably better keyboard. The keys feel a bit more muted and seem to have a little more travel. It’s still sticker-free, just a boring space grey slab.

This was still a nice upgrade: Intel i7 to i9, 4 cores to 8 cores, and faster memory (2133 MHz to 2400 MHz). The Geekbench numbers are nicer too: 1059 single-core and 6074 multi-core, a pretty big jump on paper. It does feel a little faster, but you get used to the modest speed increase pretty quickly.
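To put that jump in proportion, here is a small Python sketch that computes the relative change in each Geekbench score, using only the numbers quoted in this post (the `percent_change` helper is hypothetical, written just for this comparison):

```python
# Geekbench scores quoted in this post, as (2017, 2019) pairs.
scores = {
    "single-core": (867, 1059),
    "multi-core": (3363, 6074),
}

def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (hypothetical helper)."""
    return (new - old) / old * 100

for kind, (old, new) in scores.items():
    print(f"{kind}: {percent_change(old, new):+.1f}%")
# single-core: +22.1%
# multi-core: +80.6%
```

The multi-core gain is in the neighbourhood of what doubling the core count might suggest, while the single-core gain is much smaller, which may be why everyday use only feels a little faster.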

Let’s look at the battery stats:

| Battery Information | Value |
|---|---|
| Firmware Version | 901 |
| Cell Revision | 1734 |
| Full Charge Capacity (mAh) | 5952 |
| State of Charge (%) | 79 |
| **Health Information** | |
| Cycle Count | 168 |
| Condition | Normal |

There is a nice bump in Full Charge Capacity (+714 mAh), but things get pretty mysterious when we talk about batteries. It seems the 2017’s design capacity was 6669 mAh, and the 2019’s was 8790 mAh. I’m sure the cycle count factors in here (781 vs 168), as well as many other variables such as charge rates.
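One way to see why this feels mysterious is to compare each Full Charge Capacity against its design capacity. A quick Python sketch using only the figures quoted in this post (the `battery_health` helper is just for illustration, and it assumes the design capacities cited above are accurate):

```python
# Battery figures quoted in this post (mAh). Design capacities are the
# values cited above and are assumed to be accurate.
machines = {
    "2017": {"full_charge": 5238, "design": 6669, "cycles": 781},
    "2019": {"full_charge": 5952, "design": 8790, "cycles": 168},
}

def battery_health(full_charge_mah: int, design_mah: int) -> float:
    """Full charge capacity as a percentage of design capacity (illustrative helper)."""
    return full_charge_mah / design_mah * 100

for name, b in machines.items():
    health = battery_health(b["full_charge"], b["design"])
    print(f"{name}: {health:.1f}% of design after {b['cycles']} cycles")
# 2017: 78.5% of design after 781 cycles
# 2019: 67.7% of design after 168 cycles
```

By this measure the 2017 retains a larger share of its design capacity than the 2019 does, despite far more cycles, so the raw +714 mAh bump hides a lower percentage. That suggests cycle count alone doesn't explain the numbers.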

While I was sad to see the well-stickered, well-travelled laptop go, having a fully working keyboard is a joy you quickly take for granted. I’m still looking forward to a real hardware upgrade to a non-Touch Bar machine, maybe with the M1X or whatever comes after it. Oh, and 32GB of RAM would be very nice.