Most of us have a multitude of online systems we connect to, some regularly and others only occasionally. Each of these systems usually has its own user ID and password. How many of these can we reasonably remember? Many people try to keep at least their passwords, and often their user names too, the same across systems. The risk is that if one of these systems is compromised (or worse, is malicious), suddenly someone other than you has the keys to the castle.

There is also the challenge of picking a strong password. There are several online password generators, as well as tools that help check the strength of a password. For a while I relied on my browser to remember my passwords (via a cookie) and used randomly generated ones; when the cookie expired, I’d use the “I lost my password” feature to reset it (or just create a new user). This works OK for throw-away sites (web forums) but really sucks for a site like PayPal.
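To make “randomly generated” concrete, here is a minimal Python sketch of what such a generator does, assuming a pool of the 94 printable ASCII letters, digits and punctuation characters. The function names are illustrative only; this is not KeePass’s own generator. It uses the `secrets` module (a cryptographically secure random source) rather than `random`, and estimates strength as length × log2(pool size), which only holds for passwords chosen uniformly at random.

```python
import math
import secrets
import string

# Character pool: 52 letters + 10 digits + 32 punctuation marks = 94 symbols.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20, alphabet: str = ALPHABET) -> str:
    """Return a password whose characters are drawn uniformly at random.

    Uses `secrets` (a CSPRNG); the `random` module is not suitable for this.
    """
    if length < 1:
        raise ValueError("length must be positive")
    return "".join(secrets.choice(alphabet) for _ in range(length))


def entropy_bits(length: int, pool_size: int) -> float:
    """Entropy of a uniformly random string: length * log2(pool_size)."""
    return length * math.log2(pool_size)


if __name__ == "__main__":
    pw = generate_password()
    print(pw)
    print(f"{entropy_bits(len(pw), len(ALPHABET)):.1f} bits")  # about 131.1 bits
```

Strength checkers for human-chosen passwords can’t use this formula, since people don’t pick characters uniformly; they rely on heuristics such as dictionary and pattern matching instead.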

So I was guilty of password reuse across a number of sites. No longer, now that I’ve moved to KeePass. Of course, I now need one password (for KeePass) that I won’t forget and that is secure enough. To help with creating one, I’ll point to a great article on the usability of passwords; I encourage everyone to read it.
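One common way to get a master password that is both memorable and strong is a passphrase of several words chosen at random, in the style of Diceware. This is offered as a general technique, not as a summary of the article above. The sketch below assumes a word list; the 16-word `WORDS` list is a hypothetical placeholder and far too small for real use (Diceware-style lists have 7776 words). Strength is estimated as number of words × log2(list size).

```python
import math
import secrets

# Hypothetical placeholder list, for illustration only. A real passphrase
# should draw from a large published list (Diceware-style lists: 7776 words).
WORDS = [
    "anchor", "bramble", "cactus", "dolphin", "ember", "falcon", "glacier", "harbor",
    "island", "jigsaw", "kettle", "lantern", "meadow", "nutmeg", "orchid", "pepper",
]


def passphrase(n_words: int, words: list[str] = WORDS, sep: str = " ") -> str:
    """Join n_words words, each chosen uniformly at random with a CSPRNG."""
    return sep.join(secrets.choice(words) for _ in range(n_words))


def passphrase_entropy_bits(n_words: int, list_size: int) -> float:
    """Entropy of a random passphrase: n_words * log2(list_size)."""
    return n_words * math.log2(list_size)


print(passphrase(6))
print(passphrase_entropy_bits(6, len(WORDS)))  # 24.0 bits: far too weak
print(passphrase_entropy_bits(6, 7776))        # about 77.5 bits
```

The comparison shows why list size matters: the same six words carry 24 bits from the toy list but about 77.5 bits from a 7776-word list.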

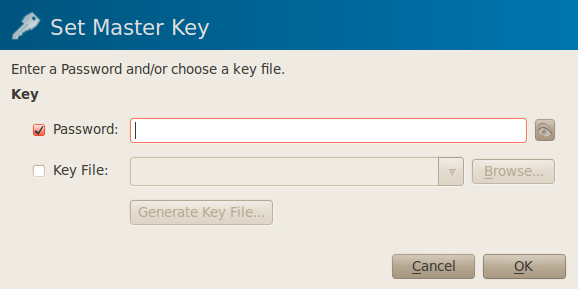

You can get versions of KeePass for almost any platform; I needed to cover Windows, Linux and Android. On first launch you’ll be asked to create a database (to store your passwords) and protect it with a master password, a key file, or both.

From the documentation: “Key files provide better security than master passwords in most cases. You only have to carry the key file with you, for example on a floppy disk, USB stick, or you can burn it onto a CD. Of course, you shouldn’t lose this disk then.” This is a neat option, but not very practical on my Nexus One.

Once you’ve created your .kdb database on one of your systems (in my case an Ubuntu box), you can move it to the other systems you’ve installed KeePass on. The Android app KeePassDroid happily opened the (1.x) database created by KeePassX. You will need a method for keeping that database synchronized across your systems; some people use Dropbox, others simply use scp.
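Whichever transport you use, it helps to know which copy changed before overwriting anything. Below is a minimal Python sketch of a “last writer wins” check, assuming both copies are reachable as local paths (for example your working file and the copy in a Dropbox folder). `sha256_of` and `sync_newer` are hypothetical helpers, not part of KeePass, KeePassX or KeePassDroid.

```python
import hashlib
import os
import shutil


def sha256_of(path: str) -> str:
    """Return the hex SHA-256 digest of a file's contents."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def sync_newer(local: str, synced: str) -> str:
    """Copy the most recently modified copy over the other one.

    Returns "in sync", "pushed" (local -> synced) or "pulled" (synced -> local).
    Last writer wins: if both copies were edited since the last sync, the
    older copy's changes are lost.
    """
    if sha256_of(local) == sha256_of(synced):
        return "in sync"
    if os.path.getmtime(local) >= os.path.getmtime(synced):
        shutil.copy2(local, synced)  # copy2 also preserves the modification time
        return "pushed"
    shutil.copy2(synced, local)
    return "pulled"
```

The design choice is deliberately simple: it avoids silently clobbering a newer copy, but it does not merge entries, so it is safest to edit the database on only one system between syncs.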

KeePass makes it easy to create strong passwords (a generator is built into the app) and to track a unique user ID and password for every site you access. Setting yourself a rule that you’ll switch to a KeePass-generated password on your next visit to each site will help you move over relatively quickly.

If you need any more encouragement to rethink how you handle passwords and accounts, just do a quick Google search. Better to lose control of one account than all of them.