Mirrored drives are also known as a RAID 1 configuration. It is important to note that mirroring is not a substitute for backups: an accidental delete or a corrupted file is copied to both drives immediately. My motivation for running RAID 1 is simply that, at today’s drive densities, I expect these drives to fail. A terabyte drive is cheap enough that doubling the cost isn’t a big deal, and the mirror gives my data a better chance of surviving a hardware failure.

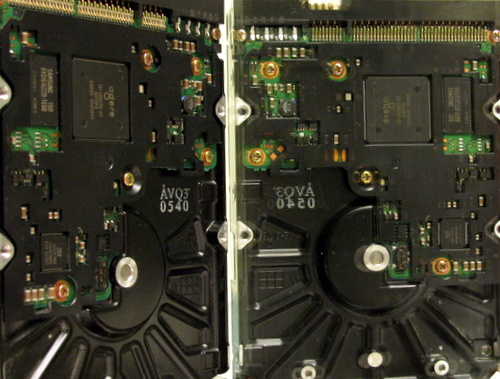

I purchased two identical drives several months apart, in the hopes of getting units from different batches. I also staggered putting them into service by a few months. The intent was to avoid both drives failing at the same time because of a shared manufacture date or identical wear. In the end, the environment they run in is probably a bigger factor in failure, but what can you do?

Linux has reasonable software RAID support through the md driver and the mdadm tool. There is an ongoing debate over the merits of software RAID vs. hardware RAID, as well as which RAID level is most useful; I leave this as an exercise for the reader. The remainder of this post covers the details of setting up RAID 1 on a live system. I found two forum postings that discussed this process, the latter being the more applicable.

We will start with the assumption that the new drive is physically installed in your system. The first step is to partition the disk. I prefer cfdisk, but fdisk will work too. This is always a little scary (make sure you have the right device), but if this is a brand new drive it should not have an existing partition table. In my scenario I wanted to split the 1TB volume into two partitions, a 300GB and a 700GB.
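If you would rather script the partitioning than step through cfdisk, the sketch below generates an sfdisk input script for the same two-partition layout. It assumes a util-linux sfdisk new enough to understand the `label:` header and size suffixes (older sfdisk releases used a different input format). `make_two_part_layout` is a hypothetical helper, and 279GiB is my approximation of 300GB, since sfdisk's size suffixes are binary units.

```shell
#!/bin/sh
# Sketch: emit an sfdisk(8) input script for a two-partition MBR layout.
# make_two_part_layout is a hypothetical helper, not part of any package.
make_two_part_layout() {
    first_size=$1                    # size of partition 1, e.g. 279GiB (about 300GB)
    printf 'label: dos\n'            # MBR ("dos") partition table, as fdisk/cfdisk create
    printf ',%s,L\n' "$first_size"   # partition 1: default start, fixed size, type Linux (83)
    printf ',,L\n'                   # partition 2: default start, rest of the disk, type Linux (83)
}
```

Review the generated script first, then feed it to sfdisk (this overwrites the partition table on /dev/sdd):

$ make_two_part_layout 279GiB

$ make_two_part_layout 279GiB | sudo sfdisk /dev/sdd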

Now let’s use fdisk to dump the results of our partitioning work:

$ sudo fdisk -l /dev/sdd

    Disk /dev/sdd: 1000.2 GB, 1000204886016 bytes
    255 heads, 63 sectors/track, 121601 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Disk identifier: 0x00000000

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1               1       36473   292969341   83  Linux
    /dev/sdd2           36474      121601   683790660   83  Linux

Next, install the RAID tools if you don’t already have them:

$ sudo apt-get install mdadm initramfs-tools

Recall that we are doing this on a live system: I’ve already got another 1TB volume (/dev/sda) that is partitioned and full of data I want to keep. So we create each RAID array in a degraded state, with one of its two member slots left empty; that is what the ‘missing’ keyword does. As I have two partitions, I need to run the create command twice, once for each of them.

$ sudo mdadm --create --verbose /dev/md0 --level=mirror --raid-devices=2 missing /dev/sdd1

$ sudo mdadm --create --verbose /dev/md1 --level=mirror --raid-devices=2 missing /dev/sdd2

Now we can look at /proc/mdstat to check the state of the arrays:

$ cat /proc/mdstat

    Personalities : [raid1]
    md1 : active raid1 sdd2[1]
          683790592 blocks [2/1] [_U]

    md0 : active raid1 sdd1[1]
          292969216 blocks [2/1] [_U]

    unused devices: <none>
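Reading /proc/mdstat by eye works, but the check is also easy to script. This sketch (the function name `degraded_arrays` is hypothetical) prints every array whose member map, the `[_U]` part, contains an underscore. It assumes the layout shown above, where each `mdN :` line is followed by a status line.

```shell
#!/bin/sh
# Sketch: list md arrays that /proc/mdstat reports as degraded.
# Each array's status line ends in a member map such as "[UU]" or "[_U]":
# one "U" per working member, one "_" per missing member.
# degraded_arrays is hypothetical; it reads /proc/mdstat, or the file in $1.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }              # remember which array we are in
        name != "" && match($0, /\[[U_]+]/) {    # first member map after that line
            map = substr($0, RSTART, RLENGTH)
            if (map ~ /_/) print name            # an underscore means a missing member
            name = ""                            # only inspect one map per array
        }
    ' "${1:-/proc/mdstat}"
}
```

At this stage, running `degraded_arrays` should print md1 and md0, since both arrays were created with a missing member.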

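The [2/1] [_U] on each array means it has two member slots but only one active device; the underscore marks the missing member. Now we format the new volumes. I’m using ext3, but feel free to choose your favorite filesystem.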

$ sudo mkfs -t ext3 /dev/md0

$ sudo mkfs -t ext3 /dev/md1

Mount the newly formatted volumes and copy the data to them from the existing drive. I used rsync for this because it is an easy way to preserve permissions, and since I’m working on a live system I can re-run the rsync later to grab any updated files before the actual switch-over.
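The copy-then-catch-up approach can be wrapped in a small function. This is a sketch, not the exact command used above: `sync_to_mirror` is a hypothetical name, and it adds `-H` (preserve hard links) and `--delete` (remove files that disappeared from the source since the previous pass) to the `-a` in the original command. If you rely on ACLs or extended attributes, add `-A` and `-X` as well. Note the trailing slash it puts on the source: that copies the directory's contents rather than creating a subdirectory inside the destination.

```shell
#!/bin/sh
# Sketch: one rsync pass from a source directory onto the mirror volume.
# sync_to_mirror is hypothetical; $1 is the source directory, $2 the
# mount point of the new array (e.g. /mntpoint).
sync_to_mirror() {
    src=$1
    dst=$2
    # "${src%/}/" forces exactly one trailing slash, so rsync copies the
    # contents of $src into $dst instead of creating $dst/<basename of src>.
    # -a keeps permissions, times and symlinks (and ownership when run as root),
    # -H keeps hard links, and --delete drops files removed from $src since the
    # last pass, so the final catch-up run leaves an exact copy.
    rsync -aH --delete "${src%/}/" "$dst"
}
```

Run it from a root shell (so ownership is preserved) once now and again just before the switch-over, and double-check the destination, since `--delete` removes anything there that is not in the source:

# sync_to_mirror /source/path /mntpoint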

$ sudo mount /dev/md0 /mntpoint

$ sudo rsync -av /source/path /mntpoint

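Once the data is moved, make the new copy on the degraded mirror volume the live one (for example, by changing the entry in /etc/fstab to mount /dev/md0 instead of the old partition), then unmount the original 1TB drive. Assuming things look OK on your system (no lost data), partition the drive you just unmounted (double and triple check the device names!), making each new partition at least as large as its counterpart on the new drive. There is no need to format these partitions: when they are added to the arrays, the rebuild overwrites them with a copy of the existing member.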
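In the spirit of triple-checking device names, here is a small guard to run before repartitioning. `device_in_use` is a hypothetical helper that only looks at /proc/mounts, so it will not notice a disk that is in use as swap or as an md member; treat it as one check among several, not a guarantee.

```shell
#!/bin/sh
# Sketch: succeed (exit 0) if the whole disk $1, or one of its partitions
# (e.g. /dev/sda1, or /dev/nvme0n1p1 for NVMe-style names), appears as a
# mounted filesystem source in /proc/mounts (or in the file given as $2).
# device_in_use is hypothetical; it does not check swap or md membership.
device_in_use() {
    disk=$1
    awk -v disk="$disk" '
        index($1, disk) == 1 {
            rest = substr($1, length(disk) + 1)   # what follows the disk name
            if (rest == "" || rest ~ /^p?[0-9]+$/) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "${2:-/proc/mounts}"
}
```

Because the new drive is already partitioned the way we want, one option for an MBR table like this one is to copy its layout onto the old drive with sfdisk's dump format, but only if the guard says the old drive is free:

$ if device_in_use /dev/sda; then echo "/dev/sda is still mounted"; else sudo sfdisk -d /dev/sdd | sudo sfdisk /dev/sda; fi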

All that is left to do is add the old drive’s new partitions to the arrays:

$ sudo mdadm /dev/md0 --add /dev/sda1

$ sudo mdadm /dev/md1 --add /dev/sda2

Again, we can check /proc/mdstat to see the status of the arrays, or run watch on the same file (for example, watch cat /proc/mdstat) to monitor the rebuild progress.
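If you only care about the percentage, this sketch pulls it out of /proc/mdstat. `rebuild_progress` is a hypothetical helper, and it only recognises `recovery` and `resync` lines like the one in the listing below; other operations such as `check` or `reshape` are ignored.

```shell
#!/bin/sh
# Sketch: print "<array> <percent>" for each array that is rebuilding.
# rebuild_progress is hypothetical; it reads /proc/mdstat, or the file in $1.
rebuild_progress() {
    awk '
        /^md[0-9]+ :/ { name = $1 }       # remember which array we are in
        name != "" && match($0, /(recovery|resync) = *[0-9.]+%/) {
            s = substr($0, RSTART, RLENGTH)
            sub(/.*= */, "", s)           # keep only the percentage, e.g. 0.6%
            print name, s
        }
    ' "${1:-/proc/mdstat}"
}
```

For the listing below, `rebuild_progress` would print md0 0.6%. To keep an eye on the full status instead, refresh it every few seconds:

$ watch -n 10 cat /proc/mdstat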

$ cat /proc/mdstat

    Personalities : [raid1]
    md1 : active raid1 sdd2[1]
          683790592 blocks [2/1] [_U]

    md0 : active raid1 sda1[2] sdd1[1]
          292969216 blocks [2/1] [_U]
          [>....................] recovery = 0.6% (1829440/292969216) finish=74.2min speed=65337K/sec

    unused devices: <none>
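To have the arrays assembled under the same names at boot, it is worth recording them in mdadm.conf and rebuilding the initramfs (update-initramfs comes from the initramfs-tools package installed earlier); on Debian and Ubuntu the file is /etc/mdadm/mdadm.conf. In this sketch, `add_missing_arrays` is a hypothetical helper that appends each ARRAY line from `mdadm --detail --scan` unless an identical line is already present, so running it twice is harmless.

```shell
#!/bin/sh
# Sketch: append ARRAY lines read on stdin (e.g. from "mdadm --detail --scan")
# to the config file $1, skipping any line that is already there verbatim.
# add_missing_arrays is hypothetical, not part of mdadm.
add_missing_arrays() {
    conf=$1
    while IFS= read -r line; do
        case $line in
            ARRAY\ *)
                # -x whole-line match, -F fixed string; a missing file counts as "not found"
                grep -qxF -- "$line" "$conf" 2>/dev/null || printf '%s\n' "$line" >> "$conf"
                ;;
        esac
    done
}
```

Since the file is owned by root, run it from a root shell (for example after sudo -s), and make sure /etc/fstab refers to /dev/md0 and /dev/md1:

# mdadm --detail --scan | add_missing_arrays /etc/mdadm/mdadm.conf

# update-initramfs -u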

That’s all there is to it. Things get a bit more complex if you are working on your root volume, but in my case I was simply mirroring one of my data volumes.

Thanks for the article, Roo. Just as another idea: I keep a mirror as well, but I only update it nightly with a cron job. I use rsync-backup, which not only does a backup but also lets me do point-in-time rollbacks. The downside, of course, is that my backup can be as much as 24 hours out of date. The advantages, however, are that the backup drive sees far less use than the main drive, I have incremental timed backups, and an accidental delete or overwrite of a file does not immediately hit the backup drive, so I can use it to recover.

The nightly mirror is another good strategy, especially if you have it automated.

My primary backup story is based on rsync as well. I’m using rsnapshot, which makes incremental backups a snap. See my write-up on that here: http://www.lowtek.ca/roo/2009/time-machine-and-linux/

I haven’t quite gotten to the point of doing offsite backups, but that’s on my to-do list.