Email spam levels seem to have been increasing recently, although reading through the news, there is some debate about whether it is really up or not. Either way, I know that my local mailbox has been getting more spam lately (specifically, more spam with binary attachments). As I host my own email server, spam is something I can address at the server level instead of relying on clever filtering by the client.

Hosting your own email server is a little dumb. I like the challenge, and knowing my data is stored on my own computer systems is comforting, but most people should just stick with Gmail or similar. If you are stubborn like me, Ubuntu makes it easy to set up your own mail server. There are, of course, other details, such as finding an ISP that allows you to host a server (exercise left to the reader).

One alternative is to run a local mail server that smarthosts through your ISP (or even Google). It can also use fetchmail or similar to suck down email from your various accounts. This keeps your data on your machines while still giving you good spam filtering, since you offload that problem to the other mail server (say, Gmail).

Ok, so you’re dumb like me, and while SpamAssassin is doing an OK job, it’d be nice to stop more spam from ever hitting your mail server. The solution is greylisting. If you’ve already got a Postfix-based mail system set up, the Ubuntu community docs make it very simple to add.
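For the curious: Postfix consults a greylisting daemon like postgrey through its policy delegation protocol, wired up with a `check_policy_service` entry in the recipient restrictions. Postfix sends a block of `name=value` attributes ending in a blank line, and the policy server answers with a single `action=...` line. The Python below is a hypothetical, simplified stand-in for that exchange, not postgrey's code: the function names and the one-line decision are mine, while the wire format and the `DEFER_IF_PERMIT` / `DUNNO` actions are Postfix's.

```python
# Minimal sketch of Postfix's policy delegation exchange (the protocol a
# greylisting daemon such as postgrey speaks). Function names are hypothetical;
# only the wire format and the DEFER_IF_PERMIT / DUNNO actions come from Postfix.


def parse_policy_request(raw: str) -> dict:
    """Turn one request block ("name=value" lines, blank-line terminated) into a dict."""
    attrs = {}
    for line in raw.splitlines():
        if not line:  # an empty line ends the request
            break
        name, _, value = line.partition("=")  # values may themselves contain '='
        attrs[name] = value
    return attrs


def format_policy_reply(action: str) -> str:
    """A reply is one action=... line followed by an empty line."""
    return f"action={action}\n\n"


def decide(attrs: dict, seen: set) -> str:
    """Toy policy: defer the first sighting of a (client, sender, recipient) triplet.

    A real greylister also enforces a minimum retry delay; this one does not.
    """
    triplet = (
        attrs.get("client_address", ""),
        attrs.get("sender", ""),
        attrs.get("recipient", ""),
    )
    if triplet in seen:
        return "DUNNO"  # no opinion: let the remaining restrictions decide
    seen.add(triplet)
    return "DEFER_IF_PERMIT Greylisted, please try again later"


# Usage with made-up documentation addresses:
request = (
    "request=smtpd_access_policy\n"
    "protocol_state=RCPT\n"
    "client_address=192.0.2.10\n"
    "sender=alice@example.org\n"
    "recipient=me@example.net\n"
    "\n"
)
seen_triplets = set()
attrs = parse_policy_request(request)
print(format_policy_reply(decide(attrs, seen_triplets)), end="")  # first try: deferred
print(format_policy_reply(decide(attrs, seen_triplets)), end="")  # retry: DUNNO
```

`DUNNO` is used on the pass path so the policy service only ever delays mail; the rest of the Postfix restrictions still get their say.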

The concept behind greylisting is very simple. Spammers are lazy, and so is spam software. One of the responses a mail server can give is a ‘temporary failure’ code. Greylisting answers the first attempt to deliver a given message with a temporary failure, and any properly configured mail server will retry after a short period of time (usually minutes). Spam software can’t be bothered to come back: it’s spraying email across a large number of servers and addresses, so a few failures aren’t important. If you want to know more, I encourage you to read through the whitepaper.
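The core idea fits in a few lines. This is a toy Python sketch, not postgrey's implementation: it keys on the exact (client IP, sender, recipient) triplet, which is a simplification, and it assumes a 300-second minimum retry delay (I believe that matches postgrey's default `--delay`, but treat it as illustrative). The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

MIN_DELAY = 300  # assumed minimum seconds between first attempt and an accepted retry

Triplet = Tuple[str, str, str]  # (client IP, envelope sender, envelope recipient)


@dataclass
class Greylist:
    min_delay: int = MIN_DELAY
    first_seen: Dict[Triplet, float] = field(default_factory=dict)

    def check(self, client_ip: str, sender: str, recipient: str, now: float) -> str:
        """Return 'defer' (answer with a temporary failure) or 'accept'."""
        key = (client_ip, sender, recipient)
        if key not in self.first_seen:
            self.first_seen[key] = now  # first attempt: remember it, then defer
            return "defer"
        if now - self.first_seen[key] < self.min_delay:
            return "defer"  # retried too soon: still a temporary failure
        return "accept"  # a well-behaved server came back after the delay


# A legitimate server retries; a spam cannon fires once and moves on.
gl = Greylist()
ip, sender, rcpt = "192.0.2.10", "alice@example.org", "me@example.net"
print(gl.check(ip, sender, rcpt, now=0))    # defer: never seen before
print(gl.check(ip, sender, rcpt, now=60))   # defer: retried after only 60 s
print(gl.check(ip, sender, rcpt, now=900))  # accept: retried after 15 minutes
```

Software that never retries only ever sees the first `defer`, which is the whole trick.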

The trade-off with greylisting is that legitimate email can be delayed. Postgrey uses an adaptive whitelist to let frequent, valid senders skip the temporary failure sequence. The place you’ll notice delays is when you reset a password: that email is likely to come from a mail server you don’t often get email from, so it will be held up by the temporary failure code.
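An adaptive whitelist can be sketched by counting how often each client has retried correctly and skipping the greylist once it crosses a threshold. This is a hypothetical Python illustration, not postgrey's code: the threshold of 5 is an assumption (I believe postgrey's `--auto-whitelist-clients` defaults to 5), and the 300-second delay is assumed as well. It also shows why the password reset case hurts: an unfamiliar server has no history, so it still gets deferred.

```python
from collections import Counter
from typing import Dict, Tuple

MIN_DELAY = 300           # assumed greylist delay in seconds
AUTO_WHITELIST_AFTER = 5  # assumed number of passes before a client is trusted


class AdaptiveGreylist:
    """Greylist that stops delaying clients which have repeatedly retried correctly."""

    def __init__(self, min_delay: int = MIN_DELAY, threshold: int = AUTO_WHITELIST_AFTER):
        self.min_delay = min_delay
        self.threshold = threshold
        self.first_seen: Dict[Tuple[str, str, str], float] = {}
        self.passes: Counter = Counter()  # accepted deliveries per client IP

    def is_whitelisted(self, client_ip: str) -> bool:
        return self.passes[client_ip] >= self.threshold

    def check(self, client_ip: str, sender: str, recipient: str, now: float) -> str:
        if self.is_whitelisted(client_ip):
            return "accept"  # frequent, proven sender: skip the temporary failure
        key = (client_ip, sender, recipient)
        first = self.first_seen.setdefault(key, now)  # record first attempt if new
        if now - first < self.min_delay:
            return "defer"
        self.passes[client_ip] += 1  # this client retried properly once more
        return "accept"


# Threshold lowered to 2 so the example stays short.
ag = AdaptiveGreylist(threshold=2)
mx = "198.51.100.7"
ag.check(mx, "a@example.org", "me@example.net", now=0)    # defer
ag.check(mx, "a@example.org", "me@example.net", now=400)  # accept, 1 pass
ag.check(mx, "b@example.org", "me@example.net", now=500)  # defer
ag.check(mx, "b@example.org", "me@example.net", now=900)  # accept, 2 passes
print(ag.check(mx, "new@example.org", "me@example.net", now=901))  # accept: whitelisted
print(ag.check("203.0.113.5", "reset@example.com", "me@example.net", now=901))  # defer: unfamiliar server
```

Whitelisting by client rather than by triplet is the design choice that makes busy, well-behaved servers stop paying the delay on every new sender/recipient pair.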

After a day, spam has dropped to zero and email is still arriving in my inbox. I did have to “wait” for a password reset email that was delayed by 1041 seconds (a bit more than 17 minutes); the length of that delay is set by the sending server’s retry cadence.
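The retry cadence point is worth spelling out: the greylist only sets a floor, and the message actually lands on the sender’s first retry after that floor. A small Python sketch, assuming a 300-second greylist delay and a made-up retry schedule (not the schedule of the server that sent my reset email); the function names are hypothetical.

```python
from typing import List, Optional

MIN_DELAY = 300  # assumed greylist delay in seconds


def observed_delay(retry_offsets: List[int], min_delay: int = MIN_DELAY) -> Optional[int]:
    """Seconds after the first attempt at which a retry is first accepted, or None."""
    for offset in sorted(retry_offsets):
        if offset >= min_delay:
            return offset  # first retry that lands after the greylist window opens
    return None  # the sender gave up before the window opened


def fmt(seconds: int) -> str:
    minutes, secs = divmod(seconds, 60)
    return f"{seconds} s ({minutes} min {secs} s)"


# Hypothetical sender that retries 60 s, 240 s and 960 s after its first attempt:
# the 240 s retry is still too early, so the message waits for the 960 s one.
print(fmt(observed_delay([60, 240, 960])))  # 960 s (16 min 0 s)
print(fmt(1041))                            # 1041 s (17 min 21 s), the delay from the post
```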

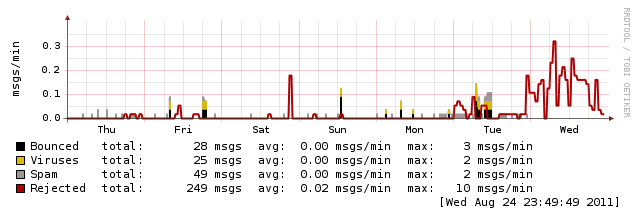

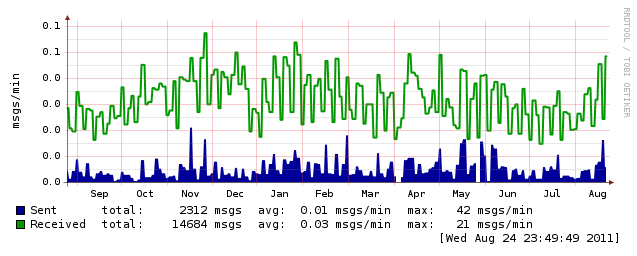

Looking at a year of mail traffic on my server, volumes don’t appear to be up that much.

The weekly graph shows a spike in rejects (due to greylisting), but if you look closely you can also see the drop-off in viruses and spam, since greylisting prevents those messages from ever being received and processed.