I’m all in on Android. I actually like Apple products just fine too; I’m composing this post on an M1 MacBook Pro. In the past I’ve toyed with lots of Apple hardware, like the 2nd generation iPod Touch. But when Google released the G1 I was hooked: a phone with a keyboard? It’s like a tiny computer in your pocket that can also make phone calls. Since then I’ve been through a lot of Android devices, both phones and tablets.

Privacy is also important to me, and Signal is a great match for my messaging needs. It has always bothered me that while you can get a very nice desktop experience linking your “primary device” (aka your phone) to your laptop, it wasn’t really possible to run Signal on an Android tablet as a linked device. The folks at Signal enabled the iPad as a linked device, but no love for Android tablets yet.

Recently I came across a solution: Molly.im. This allows my tablet to run a version of the Signal client (Molly) and be a linked device. While I am almost never far from my phone, sometimes I’m doing something on my tablet and switching devices is a pain. I also use Note to Self to move data between devices (links, photos, files).

Molly is a fork of the Signal client code for Android. From a security point of view, it uses the same Signal protocol, so your data is encrypted end to end. You do have to decide to ‘trust’ that the Molly code hasn’t been compromised in some way that would leak your data. This is the same kind of trust you are giving the folks who work on the Signal client code (or the desktop application). While it is a little uncomfortable to trust yet another group of people developing some code, we do this all the time with all of the apps we run on our devices. For me, this small risk is well worth the utility of having a linked Signal client on my tablet.

Avoid Device Linking

While it may be tempting to link your Signal account to your desktop device for convenience, keep in mind that this extends your trust to an additional and potentially less secure operating system.

If your threat model calls for it, avoid linking your Signal account to a desktop device to reduce your attack surface.

The good news for me is that my threat model doesn’t make me concerned about having my devices linked and my private communication spread across multiple devices that I own. Still, this is a decision everyone should think through.

Getting set up with Molly is very easy. You start by installing F-Droid, an alternative app store for Android. This is an APK download and install, so you’ll likely need to approve/enable the installation of ‘side-loaded’ content on your device.
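Since side-loading skips the usual app store checks, it can be worth confirming that the file you downloaded is the one that was published. Here is a minimal sketch in Python, assuming the site you downloaded from publishes a SHA-256 checksum for the APK (that is an assumption; some sites offer a PGP signature instead). The file name `F-Droid.apk` and the expected digest are placeholders, not real values.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in 1 MiB chunks so large APKs are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected_hex):
    """Compare the file's digest with a published one, ignoring case and whitespace."""
    return sha256_of_file(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Hypothetical file name and placeholder digest; substitute your own values.
    apk = Path("F-Droid.apk")
    expected = "<sha256 published by the download site>"
    if apk.exists():
        print("match" if matches_expected(apk, expected) else "MISMATCH")
    else:
        print(f"{apk} not found")
```

A mismatch does not tell you what went wrong, only that the file is not byte-for-byte what was published, so the safe move is to download it again.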

Once you have F-Droid installed, open the app. Let it do the first-time setup, where it will update the various repositories. This process will probably prompt you for some additional permissions. You’ll probably want to grant them, since you do want this new ‘app store’ to install more apps and alert you when there are updates. It’s always good to pause and think about the permissions being asked for, but F-Droid is a well-known application.

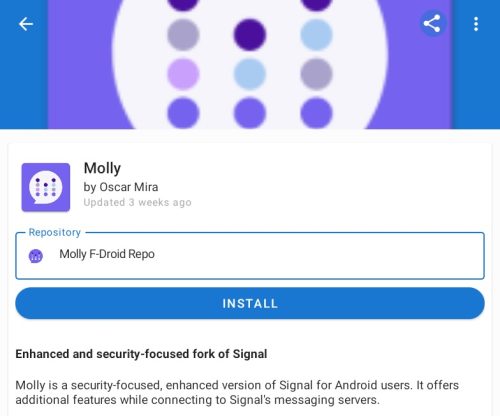

Now we need to configure the Molly application repository. While F-Droid comes with a built-in ‘store’ of content, it also supports adding additional content sources. Go to the Molly webpage and click the link for the Molly F-Droid repository. This will configure F-Droid so that it can see the Molly application. There are two versions of Molly. The FOSS one removes some of the Google integration and may be less compatible with the original Signal app, so let’s pick the non-FOSS version.

At this point, it should be just like installing any application – but instead of using the Google Play store, you’re going to use F-Droid to install Molly.

Molly can act as a primary Signal installation, or as a linked device. Assuming you were able to install Molly on your device, let’s walk through the simple steps to get you linked to your existing Signal account.

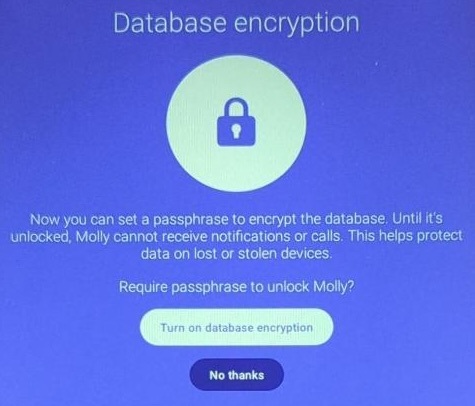

When you launch Molly for the first time you will be prompted to choose additional database encryption. This is a security trade-off: being asked to unlock the database each time may be annoying, but it will give you better security if your device is compromised.
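To make the trade-off a bit more concrete, here is a generic illustration of passphrase-based encryption keys in Python. This is not a description of how Molly implements it; the function name `derive_key`, the PBKDF2 algorithm, and the iteration count are all assumptions chosen for the example. The idea is that the key is recomputed from what you type rather than stored on the device, which is why you get asked for the passphrase and why a stolen device alone is not enough.

```python
import hashlib
import os


def derive_key(passphrase, salt, iterations=200_000):
    """Derive a 32-byte key from a passphrase with PBKDF2-HMAC-SHA256.

    Illustrative only: algorithm and iteration count are assumptions,
    not what any particular app uses.
    """
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, iterations, dklen=32
    )


if __name__ == "__main__":
    # The salt is random but not secret; it would be stored next to the database.
    salt = os.urandom(16)
    key = derive_key("a long passphrase only I know", salt)

    # Typing the same passphrase again reproduces the same key...
    print(key == derive_key("a long passphrase only I know", salt))
    # ...while a wrong guess produces a different key.
    print(key == derive_key("a wrong guess", salt))
```

The deliberately slow derivation is the point: each guess costs an attacker the same work it costs you once per unlock.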

Next we see the normal Signal launch screen.

We can just hit “Continue” here to move to the next screen.

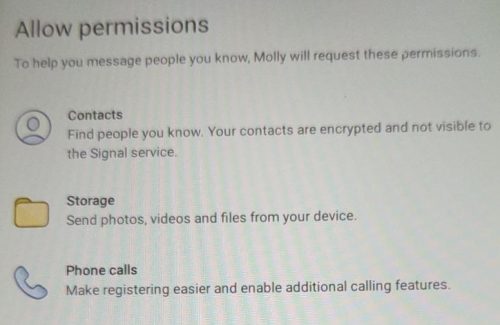

This is where you can choose which Android permissions you want to grant the Molly app. I’ll leave this up to personal choice; I didn’t give it access to my Contacts, but granted the others. Both Signal and Molly are good about using very limited permissions.

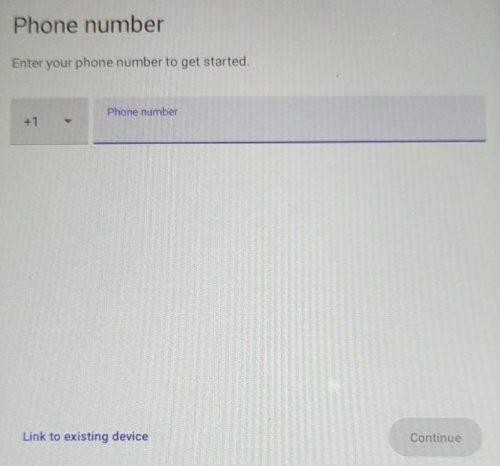

Next is the registration screen. While we could set this device up as a primary Signal device and link a phone number, we don’t want to do that in this case. Do not enter a phone number here. The “Link to existing device” option in the lower left is what we want to do. This will make this device act just like the ‘desktop’ version of Signal.

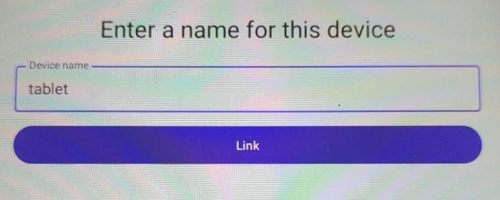

Here we get to give this device a name. Pressing the “Link” button will display a QR code we can scan from our primary device to connect the two. The Signal documentation talks about linked devices, but with Molly we get around the limitation on linking multiple mobile devices.

That’s it, now enjoy Signal on your tablet via Molly.