DIUN – Docker Image Update Notifier. I was very glad to come across this particular tool as it helped solve a problem I had, one that I felt strongly enough about that I’d put a bunch of time into creating something similar.

My approach was to write some scripts to determine the signature (digest) of the image I had deployed locally, and then make many queries to the registry to work out what changes (if any) there were in the remote image. This immediately ran into Docker Hub's API limits. There were other challenges too: the digest information you get from `docker pull` doesn't match the digest information shown on Docker Hub. I did find this useful blog post (and script) that solves a similar problem, but it also hits some of the same API limitations. It seemed like a combination of web scraping and API calls might get to a working solution, but it was turning into a hard problem.
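For a sense of what a single registry query involves, here is a minimal sketch using the Docker Registry HTTP API v2: fetch an anonymous pull token from Docker Hub's auth service, then send a `HEAD` request for the tag's manifest and read the `Docker-Content-Digest` response header. This is not the original scripting described above. It assumes Docker Hub (`docker.io`) references only, and `parse_hub_reference` and `remote_digest` are illustrative names. It does no retries and no rate-limit handling.

```python
import json
import urllib.request

# Accept both multi-arch indexes and single-image manifests so the registry
# returns the tag's top-level manifest digest instead of converting it.
ACCEPT = ", ".join([
    "application/vnd.oci.image.index.v1+json",
    "application/vnd.docker.distribution.manifest.list.v2+json",
    "application/vnd.oci.image.manifest.v1+json",
    "application/vnd.docker.distribution.manifest.v2+json",
])


def parse_hub_reference(ref: str) -> tuple[str, str]:
    """Split a Docker Hub reference into (repository, tag).

    'docker.io/python:alpine' -> ('library/python', 'alpine')
    Only Docker Hub names are handled; digests and other registries are not.
    """
    if ref.startswith("docker.io/"):
        ref = ref[len("docker.io/"):]
    repo, sep, tag = ref.rpartition(":")
    if not sep or "/" in tag:
        repo, tag = ref, "latest"  # no tag given
    if "/" not in repo:
        repo = f"library/{repo}"  # official images live under 'library/'
    return repo, tag


def remote_digest(ref: str) -> str:
    """Ask Docker Hub's registry for the current digest of an image tag."""
    repo, tag = parse_hub_reference(ref)
    # Anonymous, pull-only bearer token scoped to this one repository.
    token_url = (
        "https://auth.docker.io/token?service=registry.docker.io"
        f"&scope=repository:{repo}:pull"
    )
    with urllib.request.urlopen(token_url) as resp:
        token = json.load(resp)["token"]
    # HEAD avoids downloading the manifest body; the digest is in a header.
    request = urllib.request.Request(
        f"https://registry-1.docker.io/v2/{repo}/manifests/{tag}",
        method="HEAD",
        headers={"Authorization": f"Bearer {token}", "Accept": ACCEPT},
    )
    with urllib.request.urlopen(request) as resp:
        return resp.headers["Docker-Content-Digest"]
```

Calling `remote_digest("docker.io/python:alpine")` needs network access and returns a `sha256:` digest string. Repeating this for every image on every check costs two HTTP requests per image.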

DIUN takes a very different approach. It starts by figuring out which images you want to scan; the simplest way to do this is to let it look at all the running Docker containers on your system. For each image in that list, it queries the image registry for the current manifest digest of the image's tag. On the first run, it just saves this value in a local data store. On every later run, it compares the freshly fetched digest with the stored one, and if they differ, it notifies you.
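The compare-and-notify loop can be sketched in a few lines of Python. This is an illustration, not DIUN's implementation: a JSON file stands in for DIUN's own data store, and `check_images`, `fetch_digest` and `notify` are hypothetical names. The caller supplies `fetch_digest` (for example, something that queries the registry) and `notify` (for example, something that posts a chat message).

```python
import json
from pathlib import Path
from typing import Callable


def check_images(
    images: list[str],
    fetch_digest: Callable[[str], str],
    store_path: Path,
    notify: Callable[[str, str], None],
) -> list[str]:
    """Compare each image's current remote digest with the stored one.

    The first time an image is seen, its digest is recorded silently.
    On later runs, a different digest triggers a notification.
    Returns the images that changed on this run.
    """
    store = json.loads(store_path.read_text()) if store_path.exists() else {}
    changed = []
    for image in images:
        digest = fetch_digest(image)
        previous = store.get(image)
        if previous is not None and previous != digest:
            notify(image, digest)
            changed.append(image)
        store[image] = digest  # remember the latest digest either way
    store_path.write_text(json.dumps(store, indent=2, sort_keys=True))
    return changed
```

Note that nothing here looks at the locally deployed image. The only comparison is "remote now" against "remote last time", which is why this approach never needs to reconcile local and remote digests.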

In practice, this tells you every time a new image is published. It doesn't know whether you've updated your local image, nor does it tell you what changed in the image, only that there is a newer version. Still, this turns out to be quite useful, especially when combined with Slack notifications.

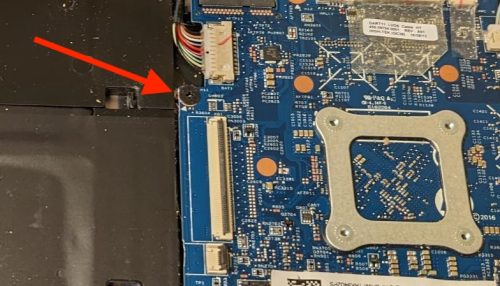

Setting up DIUN on my system was very easy. Here is the completed Makefile, based on my earlier post on managing Docker containers with make.

```makefile
#
# DIUN - Docker Image Update Notifier
# https://crazymax.dev/diun/
#
NAME = diun
REPO = ghcr.io/crazy-max/diun
#
ROOT_DIR:=$(shell dirname $(realpath $(lastword $(MAKEFILE_LIST))))

.PHONY: build start update

# Create the container
build:
	docker create \
		--name=$(NAME) \
		--hostname=diun \
		-v $(ROOT_DIR)/data:/data \
		-v /var/run/docker.sock:/var/run/docker.sock \
		-e TZ=America/Toronto \
		-e "DIUN_WATCH_SCHEDULE=0 */6 * * *" \
		-e "DIUN_PROVIDERS_DOCKER=true" \
		-e "DIUN_PROVIDERS_FILE_FILENAME=/data/config.yml" \
		-e "DIUN_PROVIDERS_DOCKER_WATCHBYDEFAULT=true" \
		-e "DIUN_NOTIF_SLACK_WEBHOOKURL=https://hooks.slack.com/services/XXXXX/XXXXXX/XXXXXX" \
		--restart=unless-stopped \
		$(REPO)

# Start the container
start:
	docker start $(NAME)

# Update the container: pull the latest image, keep the current container
# as $(NAME)-old (the leading '-' ignores the error when no old copy exists),
# then create and start a fresh container from the new image
update:
	docker pull $(REPO)
	-docker rm $(NAME)-old
	docker rename $(NAME) $(NAME)-old
	$(MAKE) build
	docker stop $(NAME)-old
	$(MAKE) start
```

At first I kept things simple and followed the installation documentation. I also configured DIUN mostly through environment variables. The three variables I needed to get started were:

- `DIUN_WATCH_SCHEDULE` – a cron expression that controls how often DIUN checks for updates
- `DIUN_PROVIDERS_DOCKER` – enable the Docker provider, which watches the running Docker containers
- `DIUN_PROVIDERS_DOCKER_WATCHBYDEFAULT` – watch every container by default, rather than only those labelled `diun.enable=true`
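The schedule used in the Makefile, `0 */6 * * *`, is a standard five-field cron expression (minute, hour, day of month, month, day of week). To show what `*/6` expands to, here is a minimal, hypothetical field expander in Python. It is not DIUN's scheduler, and it ignores month/day names and the other extensions some cron implementations add.

```python
def expand_cron_field(field: str, low: int, high: int) -> list[int]:
    """Expand one cron field such as '*/6', '1-5' or '0,30' into matching values.

    low/high are the field's bounds, e.g. 0-59 for minutes or 0-23 for hours.
    """
    values: set[int] = set()
    for part in field.split(","):
        body, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if step < 1:
            raise ValueError(f"bad step in {part!r}")
        if body == "*":
            start, end = low, high
        elif "-" in body:
            first, last = body.split("-", 1)
            start, end = int(first), int(last)
        else:
            start = int(body)
            # 'n/step' (a common extension) runs from n to the top of the range
            end = high if step_text else start
        if not low <= start <= end <= high:
            raise ValueError(f"{part!r} is outside {low}-{high}")
        values.update(range(start, end + 1, step))
    return sorted(values)


# The schedule from the Makefile: minute, hour, day of month, month, day of week.
minute, hour, *_ = "0 */6 * * *".split()
print(expand_cron_field(minute, 0, 59), expand_cron_field(hour, 0, 23))
# → [0] [0, 6, 12, 18]
```

In other words, the check runs at minute 0 of hours 0, 6, 12 and 18: every six hours.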

The start-up logs for the diun container are quite informative, and DIUN generally emits useful error messages if you have a configuration problem.

I later added `DIUN_NOTIF_SLACK_WEBHOOKURL` to get Slack notifications. You need to do a little setup in your Slack workspace to enable incoming webhooks, but it is quite handy for me to get a notification in a private channel telling me that I should go pull down a new container.
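A Slack incoming webhook is an HTTPS endpoint that accepts a POST with a small JSON body, which is why a single URL is all the configuration DIUN needs here. The sketch below shows that general mechanism with Python's standard library. It is not DIUN's code: the message text and the names `build_slack_payload` and `post_to_slack` are made up for illustration, and DIUN formats its own, richer messages.

```python
import json
import urllib.request


def build_slack_payload(image: str, digest: str) -> bytes:
    """JSON body for a Slack incoming webhook; only the 'text' field is used."""
    text = f"New image available: {image} ({digest})"
    return json.dumps({"text": text}).encode("utf-8")


def post_to_slack(webhook_url: str, image: str, digest: str) -> int:
    """POST the message to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=build_slack_payload(image, digest),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        return resp.status
```

To try it, call `post_to_slack` with your own webhook URL in place of the `XXXXX` placeholder used in the Makefile.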

Finally, I added a configuration file, `./data/config.yml`, to track additional Docker images that serve as base images for some locally built Dockerfiles. This alerts me when one of those base images gets an update and reminds me to rebuild any containers that depend on it. The file is enabled with the environment variable `DIUN_PROVIDERS_FILE_FILENAME`, which in the Makefile points to `/data/config.yml`, the path of the mounted `./data` directory inside the container.

My configuration file looks like:

```yaml
# diun config
# points to docker images we use in locally built containers
#

# used by project alpha
- name: docker.io/python:alpine

# used by project beta
- name: docker.io/python:bullseye

# used by ted5000 exporter
- name: docker.io/python:2.7-alpine

# used by restricted shell
- name: docker.io/alpine
```
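When DIUN reports an update to one of these base images, the remaining step is working out which local builds use it. A small hypothetical helper can scan Dockerfile `FROM` lines for the reported image. This is not part of DIUN: `dockerfiles_using` and `normalize` are illustrative names, and the sketch assumes Docker Hub images only. It also ignores build arguments inside `FROM` lines and treats multi-stage stage names as ordinary images (which simply never match).

```python
import re

# Matches 'FROM image[:tag] [AS name]', skipping an optional --platform flag.
FROM_LINE = re.compile(
    r"^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)", re.IGNORECASE | re.MULTILINE
)


def normalize(ref: str) -> str:
    """Make 'python:alpine' and 'docker.io/python:alpine' compare equal."""
    for prefix in ("docker.io/", "library/"):
        if ref.startswith(prefix):
            ref = ref[len(prefix):]
    last = ref.rsplit("/", 1)[-1]
    if ":" not in last and "@" not in last:
        ref += ":latest"  # an untagged reference means ':latest'
    return ref


def dockerfiles_using(updated_image: str, dockerfiles: dict[str, str]) -> list[str]:
    """Return the names of Dockerfiles whose FROM lines use updated_image."""
    target = normalize(updated_image)
    return sorted(
        name
        for name, text in dockerfiles.items()
        if any(normalize(ref) == target for ref in FROM_LINE.findall(text))
    )
```

For example, with a Dockerfile containing `FROM python:alpine`, `dockerfiles_using("docker.io/python:alpine", ...)` returns its name, because both references normalize to `python:alpine`.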

I’ve actually been running with this for a couple of weeks now. I really like the linuxserver.io project and recommend images built by them. They have a regular build schedule, so you’ll see (generally) weekly updates for those images. I have nearly 30 different containers running, and it’s interesting to see which ones are updated regularly and which seem to be more static (dormant).

Some people use Watchtower to update their containers automatically. I tend to subscribe to the philosophy that this is not a great idea for a ‘production’ system, and at least some of the linuxserver.io folks agree. I like to have my hands on the keyboard when I do an update, so I can make sure I'm around to deal with any problems that come up.