Recently I discovered that the iPhoto data was actually stuffed under a deleted user that existed as part of the Mac migration process, which meant it wasn’t being seen by my rsnapshot backup of the active user directory. Fixing the location of the iPhoto library was relatively easy, but having an extra 130GB of data to back up immediately ran me into storage problems.

I had set up a RAID1 system using two 1TB volumes, and I had decided to split the 1TB mirrored volume into 300GB/700GB so I could limit the space used by backups to 300GB. In hindsight this was a silly idea, and it also made the migration process more complicated. If I had placed the 300GB volume second, it might have been feasible to move that data somewhere and then expand the 700GB volume to fill the remainder of the drive, but I had put the 300GB volume first. One day someone will write the utility to allow you to shift the start of a volume to the left (towards the start of a drive).

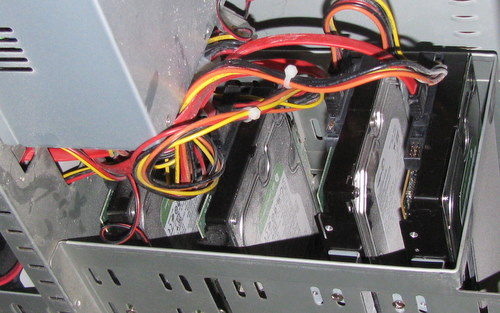

Instead of sticking with a RAID1 setup, I decided to move to RAID5. While there is a little less redundancy with RAID5, the additional flexibility seems like a good trade-off to me at this point. I’ll avoid getting into the religious debate over which type of RAID you should use, or whether RAID makes sense at all with large drives. Also, there are some good off-the-shelf solutions now such as Drobo or QNAP.
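To put a rough number on that trade-off, here is a minimal sketch of the usable-capacity arithmetic, assuming equal-sized members: a mirror gives you one member's worth of space, while RAID5 gives you all but one. The function name `raid_usable` is made up for this example and the sizes are in whatever unit you pass in.

```shell
#!/usr/bin/env bash
# Hypothetical helper: usable capacity for RAID1 or RAID5,
# assuming every member is the same size.
raid_usable() {
    local level="$1" devices="$2" size="$3"
    case "$level" in
        1) echo "$size" ;;                          # mirror: one member of space
        5) echo $(( (devices - 1) * size )) ;;      # RAID5: one member lost to parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_usable 1 2 1000   # two-drive mirror, sizes in GB: prints 1000
raid_usable 5 3 1000   # three-drive RAID5, sizes in GB: prints 2000
```

So going from two drives to three doubles the usable space, while either layout still survives only a single drive failure.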

With a project like this it is a good idea to make a plan in advance, then log your steps as you go along. Migration of hundreds of GB of data will take time, lots of time. I did the work over about 5 days, some of it while on a trip outside the country (remote access!). Here was my plan:

- install new drive – ensure system is happy

- break mirrored set – run in degraded mode

- repartition new drive & unused mirrored drive

- create degraded raid 5 (2 drives only)

- copy data from degraded mirror onto degraded raid5

- decommission degraded mirror & repartition

- add volume to raid5 set
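Logging the steps of a plan like this can be as simple as a small wrapper that records each command and its output. This is only a sketch: the function name `log_run` and the log file location are assumptions for illustration, not something I used at the time.

```shell
#!/usr/bin/env bash
# Assumed log location; override by setting LOGFILE before calling log_run.
LOGFILE="${LOGFILE:-$HOME/raid-migration.log}"

# Hypothetical helper: run a command, and append a timestamped header,
# the command's output (stdout and stderr), and its exit status to the log.
# The output is also shown on the terminal via tee.
log_run() {
    {
        printf '=== %s $ %s\n' "$(date '+%F %T')" "$*"
        "$@" 2>&1
        printf '=== exit status: %d\n' "$?"
    } | tee -a "$LOGFILE"
}
```

You would then run each step through it, for example `log_run sudo mdadm -D /dev/md1`, and end up with a running record of what was done and when.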

I was also careful to check that the new volume had the same capacity as the other two, having been bitten by that in the past. (I used fdisk -l /dev/sde to get the stats of the drive.)
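If you would rather compare the numbers than eyeball them, a check along these lines might help. It is a sketch only: `all_same_size` is a made-up name, and the device names in the usage comment are placeholders for whatever your system shows. Keep in mind that mdadm sizes an array by its smallest member, so a slightly smaller drive is the case to watch for.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every size passed in (in bytes)
# is identical to the first one.
all_same_size() {
    local first="$1" size
    for size in "$@"; do
        [ "$size" = "$first" ] || return 1
    done
    return 0
}

# Example use against real partitions (placeholder device names, needs root):
#   all_same_size "$(blockdev --getsize64 /dev/sda1)" \
#                 "$(blockdev --getsize64 /dev/sdb1)" \
#                 "$(blockdev --getsize64 /dev/sde1)" \
#       && echo "sizes match" || echo "sizes differ"
```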

Step1: In general with Ubuntu it is a good idea to assign drives by the UUID value instead of the device name. Physical reconfiguration of the system (such as adding a new drive) can cause the device names to change. It turns out that mdadm uses UUID values by default, and my boot drive is UUID mapped, so while there was a reshuffling of the device names, installing the new drive had zero impact on my running system.

Step2: Breaking the mirror. I suggest you first gather the details of the array and the device names using “sudo mdadm -D /dev/md1”; we will be talking about device names soon enough and you want that information logged. To break the mirror we first fail a device, then remove it.

sudo mdadm /dev/md1 --fail /dev/sde1

sudo mdadm /dev/md1 --remove /dev/sde1

Step3: We now prepare the new drive and the failed mirror drive to be new volumes ready for the RAID5 array. I did this using cfdisk, which I prefer, but use whatever you want. Make sure you have the device names correct! I’m always a little scared when repartitioning, and you should be too.

Step4: Create a new three volume RAID5 array with the 2 drives plus a missing drive.

sudo mdadm --create --verbose /dev/md3 --level=5 --raid-devices=3 /dev/sda1 missing /dev/sde1

Looking at /proc/mdstat should show you the new array in degraded state:

cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md3 : active raid5 sde1[2] sda1[0]

1953519872 blocks level 5, 64k chunk, algorithm 2 [3/2] [U_U]
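The [3/2] [U_U] at the end is the part to look at: three slots, two active, with the underscore marking the missing member. If you want a script to check this rather than reading it by eye, something like the following sketch could work. The function name `md_state` is an assumption for illustration, and it only looks at the status field in the form shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "degraded" or "healthy" for one array, based on
# the [U_U]-style status field in mdstat output. Prints nothing if the array
# is not listed. Reads /proc/mdstat unless another file is given.
md_state() {
    local array="$1" file="${2:-/proc/mdstat}"
    awk -v md="$array" '
        $1 == md && $2 == ":" { found = 1; next }   # header line for this array
        found && match($0, /\[[U_]+\]/) {            # first status field after it
            status = substr($0, RSTART, RLENGTH)
            print (index(status, "_") ? "degraded" : "healthy")
            exit
        }
    ' "$file"
}

# Usage: md_state md3
```

Run against the output above it reports the array as degraded, which is exactly what we expect at this stage.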

Step5: Copy from the degraded mirror to the new RAID5. This is going to take a long time. You can choose to use one of two commands to accomplish the copy:

sudo rsync -aH /source/path /mntpoint

or

sudo cp -a /source/path /mntpoint

The benefit of rsync is that it can be resumed, or re-run to grab any recent deltas without having to copy everything again. One downside to rsync is that it can use a lot of memory if you have a lot of files. I ended up using a mix of both over the approximately 3 days that I copied files to the new array.
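Because rsync can be re-run, it also gives you a cheap way to see what is still outstanding before you switch over. Here is a sketch of that idea, with two made-up function names (`sync_pass` and `pending_changes`). Note that, unlike the commands above, these add a trailing slash to both paths so that the contents of the source directory land directly in the destination directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: one copy pass, preserving attributes (-a) and
# hard links (-H). Safe to re-run; only the deltas are copied.
sync_pass() {
    local src="${1%/}" dst="${2%/}"
    rsync -aH "$src/" "$dst/"
}

# Hypothetical helper: dry run (-n) with an itemized list (-i) of what a
# further pass would still transfer. Nothing is copied or modified.
pending_changes() {
    local src="${1%/}" dst="${2%/}"
    rsync -aHni "$src/" "$dst/"
}

# Usage (paths are placeholders):
#   sync_pass /source/path /mntpoint/path
#   pending_changes /source/path /mntpoint/path
```

A final `pending_changes` run that lists nothing you care about is a reasonable sign that the new array has caught up with the old one.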

Step6: Decommission the remaining (non)mirrored volume. Before you remove the array you’ll want to switch the mount points so your system goes from using the mirror as your data volume to the new RAID5 array. To remove a RAID array we stop, then remove it.

sudo mdadm --stop /dev/md1

sudo mdadm --remove /dev/md1

This should free up the device and you can now repartition it (if needed).

Step7: Add the volume to the RAID5 set and we’re done.

sudo mdadm --add /dev/md3 /dev/sdb1

You can use watch cat /proc/mdstat to watch the progress of the ‘recovery’ of your RAID5 array. This too will take a while.
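If you are checking in remotely and only want the number, the percentage can be pulled out of the progress line. This is a rough sketch: `md_progress` is a made-up name, and it assumes the progress line contains text of the form "recovery = NN.N%" (or "resync = NN.N%").

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the percentage from the first recovery/resync
# progress line in mdstat output. Prints nothing when no rebuild is running.
# Reads /proc/mdstat unless another file is given.
md_progress() {
    local file="${1:-/proc/mdstat}"
    sed -nE 's/.*(recovery|resync) *= *([0-9.]+%).*/\2/p' "$file" | head -n 1
}

# Usage: md_progress
```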

There are certainly more elegant ways to get from RAID1 to RAID5; I can think of at least one where I could have avoided at least some of the copy step. It is always best to work from a plan for multi-day, multi-step operations like this, and stick with the plan you come up with initially. Simple solutions are elegant too, even if they are a bit of brute force.
