I’ve had the domain lowtek.ca for 20 years now. Over that time I’ve used a few different ways to host the domain and the DNS records that point it to my IP address.

For a long time I had a static IP, which let me set my DNS record and forget about it. Depending on my ISP, I could even get the reverse lookup to work. Paying a bit extra for a static IP was well worth it with a DSL connection, where you would otherwise frequently get a new address. When I moved to cable, a static IP wasn’t an option, but the IP rarely changes anyway (once every year or two).

Back in the early days, domain registrars either charged for their DNS services or didn’t offer them at all, which pushed me to look for free alternatives. I’ve used a few solutions, most recently some systems that my brother maintains and that I have root access on, which lets me modify the BIND configuration files.

Recently I realized that rebel.ca would allow me to manage my DNS records, and it’s free.

Once logged into rebel.ca, you can manage your domains. For a given domain, open the DNS tab. Under “Nameserver Information” you can pick one of three options:

- Park with Rebel.ca

- Forward Domain

- Use Third Party Hosting

Until very recently I was using the Third Party Hosting option, pointing at the DNS servers managed by my brother. This worked well, but it was another place to log into. Because I rarely made updates, I also had to re-learn the BIND syntax every time I edited my records. With rebel.ca managing it, I get an easy web UI to change things.

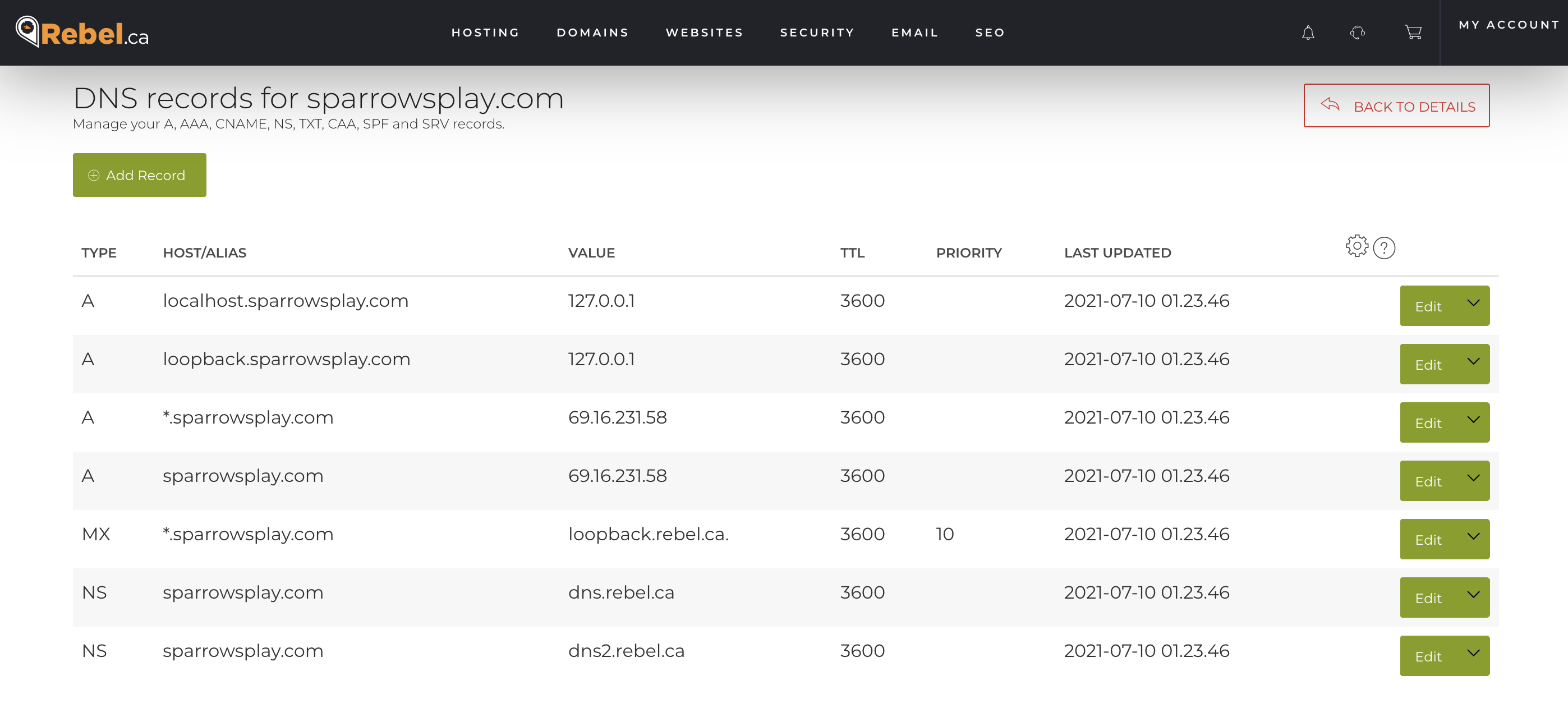

I did find it odd that I had to opt to “Park with Rebel.ca” in order to start managing my DNS. Once you’ve selected this option, you can then use the “Advanced DNS Manager” to see the records that they have for your domain.

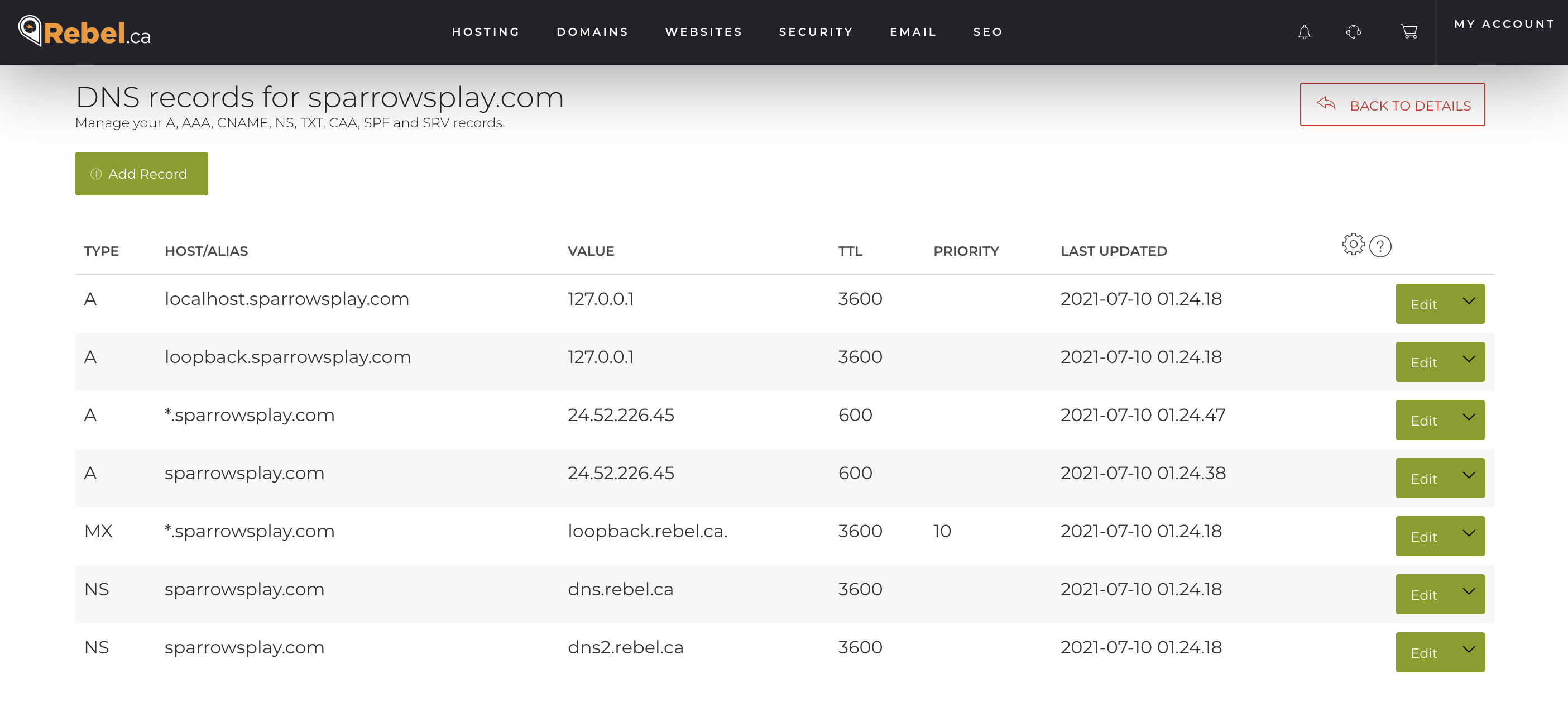

Here is what I got once I had parked my domain.

The IP address 69.16.231.58 reverse maps to lb04.parklogic.com, and parklogic.com appears to be a domain parking company. Visiting a parked domain appears to redirect to https://simcast.com/ eventually.

Since this particular domain only has a web server on it, I only need to make 2 changes to the default entry that was provided to me.

I could probably safely trim down some of the entries. I don’t need an MX record, nor the localhost or loopback entries. The wildcard could also be reduced to only the www subdomain. This works as is, and the extra entries shouldn’t cause any problems.

One thing that I’ve given up by moving to the rebel.ca DNS is control over the SOA record. Let’s look at the differences in the SOA records.

My old nameserver

```
sparrowsplay.com
	origin = ns.sbg.org
	mail addr = super.undernet.org
	serial = 2021063001
	refresh = 600
	retry = 7200
	expire = 3600000
	minimum = 600
```

Rebel.ca nameserver

```
sparrowsplay.com
	origin = dns.rebel.ca
	mail addr = noc.rebel.ca
	serial = 2021070801
	refresh = 10800
	retry = 3600
	expire = 604800
	minimum = 3600
```

This boils down to these differences (old vs. rebel.ca):

- Refresh: 10min vs. 3hrs

- Retry: 2hrs vs. 1hr

- Expire: 1000hr vs. 168hr

- Minimum: 10min vs. 1hr
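All SOA timers are stored in seconds, so the comparison above is just unit conversion. The following is a small sketch that reproduces it from the raw values in the two outputs. The helper name `humanize` is my own and is not part of any DNS tool.

```python
# SOA timer values in seconds, copied from the two nslookup outputs above.
OLD = {"refresh": 600, "retry": 7200, "expire": 3600000, "minimum": 600}
REBEL = {"refresh": 10800, "retry": 3600, "expire": 604800, "minimum": 3600}


def humanize(seconds: int) -> str:
    """Render a duration as whole minutes (under an hour) or whole hours."""
    minutes, leftover = divmod(seconds, 60)
    if leftover == 0 and minutes < 60:
        return f"{minutes}min"
    hours, leftover = divmod(seconds, 3600)
    if leftover == 0:
        return f"{hours}hr"
    return f"{seconds}s"  # fall back to raw seconds for uneven values


for field in ("refresh", "retry", "expire", "minimum"):
    print(f"{field.capitalize()}: {humanize(OLD[field])} vs. {humanize(REBEL[field])}")
```

Running it prints the same four comparisons as the list, for example `Expire: 1000hr vs. 168hr`.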

At first I was a little concerned that the refresh value was so different. I worried it would affect how long it takes for changes to my DNS records to roll out in general. With the previous 10min refresh, I’d seen DNS changes take effect fairly quickly. This is somewhat mitigated by the fact that my site(s) are not high traffic, so often the values are not cached at all. This might burn me when my IP address changes, but hopefully for only ~3hrs. I can probably live with that if it turns out to be that long.

The retry and expire values are basically in the same range, so it’ll be fine.
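To make sense of the refresh concern, it helps to see which servers these timers actually govern. As I understand RFC 1035, refresh, retry and expire only steer *secondary* nameservers that keep a copy of the zone in sync with the primary. They do not control how long recursive resolvers cache my records.

The following is a rough model of that polling loop. The class and function names are made up for illustration. The model also ignores NOTIFY, a mechanism that lets a primary tell its secondaries about a change before the refresh timer fires.

```python
from dataclasses import dataclass


@dataclass
class SoaTimers:
    refresh: int  # seconds between routine checks of the primary's serial
    retry: int    # seconds to wait before retrying after a failed check
    expire: int   # seconds without a successful check before the zone is dropped


def next_check(t: SoaTimers, last_attempt: int, last_attempt_ok: bool) -> int:
    """Time (in seconds) at which a secondary next polls the primary."""
    return last_attempt + (t.refresh if last_attempt_ok else t.retry)


def still_authoritative(t: SoaTimers, last_success: int, now: int) -> bool:
    """A secondary stops answering for the zone once `expire` elapses without a refresh."""
    return now - last_success < t.expire


rebel = SoaTimers(refresh=10800, retry=3600, expire=604800)
print(next_check(rebel, last_attempt=0, last_attempt_ok=True))   # 10800
print(next_check(rebel, last_attempt=0, last_attempt_ok=False))  # 3600
print(still_authoritative(rebel, last_success=0, now=604799))    # True
```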

The minimum field is used for negative caching: answers saying that a name or record doesn’t exist are cached for up to this many seconds. (Historically this field served as the zone’s default TTL, but RFC 2308 redefined it for negative caching.) The 1hr value is in line with the general recommendations; RFC 2308 suggests one to three hours. I think the previous 10min setting was too low and would cause extra network traffic in some cases.
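RFC 2308 gives the exact rule for negative caching. A resolver caches a negative answer for the smaller of two values: the SOA record’s own TTL and its MINIMUM field.

The following is a minimal sketch that plugs in Rebel.ca’s values. The SOA record’s 86400-second TTL comes from the `dig` output further down. The function name is hypothetical.

```python
def negative_cache_ttl(soa_record_ttl: int, soa_minimum: int) -> int:
    """Seconds a resolver may cache an NXDOMAIN/NODATA answer (RFC 2308):
    the smaller of the SOA record's own TTL and its MINIMUM field."""
    return min(soa_record_ttl, soa_minimum)


# Rebel.ca: SOA record TTL 86400 (from the dig output), MINIMUM 3600.
print(negative_cache_ttl(86400, 3600))  # 3600
```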

While I don’t control the SOA record, I can control the TTL for individual records, as you will have noticed in the screenshots above where I’ve set some of them to 600.

```
% dig @dns.rebel.ca sparrowsplay.com ANY +noall +answer

; <<>> DiG 9.10.6 <<>> @dns.rebel.ca sparrowsplay.com ANY +noall +answer
; (2 servers found)
;; global options: +cmd
sparrowsplay.com.	3600	IN	NS	dns.rebel.ca.
sparrowsplay.com.	3600	IN	NS	dns2.rebel.ca.
sparrowsplay.com.	600	IN	A	24.52.226.45
sparrowsplay.com.	86400	IN	SOA	dns.rebel.ca. noc.rebel.ca. 2021070801 10800 3600 604800 3600
```

So for the sparrowsplay.com A record, I have 600 seconds (10min) set.
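Each answer line in that `dig +noall +answer` output is whitespace-separated: owner name, TTL, class, type, then the record data. That makes it easy to pull the TTLs out programmatically.

The following is a small parser sketch, fed with the output above pasted in as a string. `parse_answer` and `Record` are my own helpers and are not part of dig.

```python
from typing import NamedTuple


class Record(NamedTuple):
    name: str
    ttl: int
    rclass: str
    rtype: str
    data: str


def parse_answer(text: str) -> list[Record]:
    """Parse `dig +noall +answer` output into records, skipping ';' comment lines."""
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(";"):
            continue
        # name, TTL, class, type, then everything else is the record data
        name, ttl, rclass, rtype, data = line.split(None, 4)
        records.append(Record(name, int(ttl), rclass, rtype, data))
    return records


DIG_OUTPUT = """\
; <<>> DiG 9.10.6 <<>> @dns.rebel.ca sparrowsplay.com ANY +noall +answer
; (2 servers found)
;; global options: +cmd
sparrowsplay.com. 3600 IN NS dns.rebel.ca.
sparrowsplay.com. 3600 IN NS dns2.rebel.ca.
sparrowsplay.com. 600 IN A 24.52.226.45
sparrowsplay.com. 86400 IN SOA dns.rebel.ca. noc.rebel.ca. 2021070801 10800 3600 604800 3600
"""

for rec in parse_answer(DIG_OUTPUT):
    print(rec.rtype, rec.ttl)
```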

There is a lot of confusion about DNS records in general, as is apparent in this Stack Overflow post. I know from experience with BIND that if I forgot to update the SOA serial number, my changes wouldn’t propagate. I believe this is because the secondary nameservers only fetch a new copy of the zone when the serial number increases. As I read through things, the low TTL on the A records should make my changes propagate quickly (10min vs. 3hrs).
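Both serials shown earlier appear to follow the common YYYYMMDDnn convention: a date plus a two-digit counter. A secondary compares serials using RFC 1982 serial-number arithmetic and only transfers the zone when the primary’s serial is newer. That explains why a forgotten bump stalls updates.

The following is a minimal sketch of both steps. The function names are hypothetical, and the bumping scheme is only an assumed convention, not necessarily how Rebel.ca generates its serials.

```python
import datetime


def next_serial(current: int, today: datetime.date) -> int:
    """Bump a YYYYMMDDnn serial: start at nn=01 on a new day, else add one."""
    first_of_day = int(today.strftime("%Y%m%d")) * 100 + 1
    return max(current + 1, first_of_day)


def serial_newer(s1: int, s2: int) -> bool:
    """True if s2 is newer than s1 under RFC 1982 32-bit serial arithmetic."""
    half = 2 ** 31
    return (s1 < s2 and s2 - s1 < half) or (s1 > s2 and s1 - s2 > half)


old = 2021063001
new = next_serial(old, datetime.date(2021, 7, 8))
print(new)                     # 2021070801
print(serial_newer(old, new))  # True: a secondary would transfer the zone
print(serial_newer(new, new))  # False: forgetting to bump means no transfer
```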

To test this, I changed the record back to 69.16.231.58 and used one of the DNS propagation checking sites. Some of the servers picked up the change immediately. The ‘big’ nameservers (OpenDNS, Google, Quad9) seemed to pick up the change very quickly. I did see Google and OpenDNS randomly return the old address while I was refreshing for updates. Presumably there is a load balancer in front of many nameservers, and not all of them had picked up the change (yet).

At almost precisely the 10min mark, all of the nameservers appeared to have the new IP address. This is very good news: the TTL on the A record determines how long an IP change for my website takes to propagate. My earlier concern about the SOA record was mistaken. With TTL control over the A record, I can quickly update my IP address.
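The observed ~10 minute window matches a simple model. A resolver that cached the old A record just before the change keeps serving it for at most the record’s TTL. The worst case is therefore bounded by the TTL (600 s here), not by the SOA refresh.

The following sketch assumes every resolver honours the TTL exactly; some resolvers can be configured to clamp TTLs, which this ignores. The function name and timestamps are made up for illustration.

```python
def worst_case_delay(change_at: int, cached_at: list[int], ttl: int) -> int:
    """Seconds after `change_at` until the last resolver stops serving the old answer.

    Assumes each resolver honours the TTL exactly: a copy cached at time c
    is served until c + ttl, then re-fetched from the authoritative server.
    """
    delays = [c + ttl - change_at for c in cached_at if c <= change_at < c + ttl]
    return max(delays, default=0)


# Hypothetical resolvers that cached the old A record 1s, 300s and 599s before a change at t=1000.
print(worst_case_delay(1000, [999, 700, 401], ttl=600))  # 599
```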