A couple of years ago I picked up a conference give-away flash drive (4GB), which at the time seemed like a pretty nice freebie. The trouble was that it only ever played nice with my Windows machine; Linux refused to mount it. The headline photo shows the final product. I failed to take a before picture, but the leather + snap case on this USB thumb drive was hideous anyway.

Turns out it was a fake; good thing it was free. Still, in typical fashion I didn't want to just throw it away (heck, I'm still carrying around the mysterious AMD 1GB key I got ages ago), so this bogus 4GB key sat in my work bag for a long while before I finally got around to investigating it.

The very first thing I did was run the Linux command `lsusb`, which clued me in that something was wrong with the drive (it was fake). A forum post got me started down the right path, and I got a copy of ChipGenius, which told me the following:

- Description: [I:]USB Mass Storage Device(Generic Flash Disk)
- Device Type: Mass Storage Device
- Protocal Version: USB 2.00
- Current Speed: High Speed
- Max Current: 100mA
- USB Device ID: VID = 0011 PID = 7788
- Serial Number: 874BE199
- Device Vendor: Generic
- Device Name: Mass Storage
- Device Revision: 0103
- Manufacturer: Generic
- Product Model: Flash Disk
- Product Revision: 8.00
- Controller Vendor: Alcor Micro
- Controller Part-Number: SC708(FC8708)/AU6987 - F/W EC23
- Flash ID code: ADD5949A - Hynix H27UAG8T2BTR - 1CE/Single Channel [MLC-8K] -> Total Capacity = 2GB
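On Linux, the same VID/PID pair should appear in `lsusb` output as `ID 0011:7788`, since both tools read it from the device's USB descriptor. Here is a minimal sketch for spotting that pair in `lsusb` text. The function names and the `SUSPECT_IDS` set are hypothetical helpers of mine, and it assumes the usual `Bus NNN Device NNN: ID vvvv:pppp description` line layout.

```python
import re
from typing import List, Optional, Tuple

# Matches lsusb lines such as:
#   "Bus 001 Device 005: ID 0011:7788 Generic Flash Disk"
# and captures the vendor (VID) and product (PID) IDs as hex strings.
LSUSB_LINE = re.compile(r"^Bus \d{3} Device \d{3}: ID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})")

# Hypothetical watch list: the VID/PID pair ChipGenius reported for this drive.
SUSPECT_IDS = {("0011", "7788")}


def parse_lsusb_line(line: str) -> Optional[Tuple[str, str]]:
    """Return (vid, pid) in lowercase hex, or None if the line doesn't match."""
    match = LSUSB_LINE.match(line.strip())
    if match is None:
        return None
    return match.group(1).lower(), match.group(2).lower()


def find_suspect_devices(lsusb_output: str) -> List[str]:
    """Return every lsusb line whose VID/PID pair is in SUSPECT_IDS."""
    hits = []
    for line in lsusb_output.splitlines():
        if parse_lsusb_line(line) in SUSPECT_IDS:
            hits.append(line.strip())
    return hits
```

To run it against a live system, pass in `subprocess.run(["lsusb"], capture_output=True, text=True).stdout` (Python 3.7 or later). The description text after the IDs varies by device, so the sketch matches on the IDs alone.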

So this felt like progress: the flash is 2GB, not the 4GB that Windows thinks it is. Still not bad for free. I then used my camera to get some close-up shots of the naked circuit board to confirm the data that the ChipGenius tool dug out.
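A drive that claims more space than its flash actually holds usually accepts writes past the real capacity and then loses them or wraps them onto earlier blocks. Tools such as H2testw and f3 catch this by writing data they can later verify. Below is a minimal sketch of that idea, not how either tool is actually implemented. Each block is stamped with its own index, so a block that was overwritten by a wrapped-around write fails verification. The function names and `BLOCK_SIZE` are my own assumptions. Pointed at a device node it destroys everything on the drive. On a real stick, unplug and replug it between writing and verifying, otherwise the reads may be served from the OS page cache rather than the flash.

```python
import os
import struct

BLOCK_SIZE = 4096  # bytes per test block (assumption; any multiple of 8 works here)


def make_block(index: int, block_size: int = BLOCK_SIZE) -> bytes:
    """Build a block filled with its own index, so a block that was overwritten
    by a wrapped-around write (block N landing on block M) won't verify."""
    stamp = struct.pack("<Q", index)  # 8-byte little-endian block number
    return (stamp * (block_size // len(stamp)))[:block_size]


def write_blocks(path: str, n_blocks: int, block_size: int = BLOCK_SIZE) -> None:
    """Overwrite the first n_blocks of an existing file or device with stamped blocks."""
    with open(path, "r+b") as f:
        for i in range(n_blocks):
            f.write(make_block(i, block_size))
        f.flush()
        os.fsync(f.fileno())  # push the data out of Python and OS buffers


def count_good_blocks(path: str, n_blocks: int, block_size: int = BLOCK_SIZE) -> int:
    """Read the blocks back and count how many still carry their own stamp."""
    good = 0
    with open(path, "rb") as f:
        for i in range(n_blocks):
            if f.read(block_size) == make_block(i, block_size):
                good += 1
    return good
```

Multiplying the good-block count by `BLOCK_SIZE` gives a rough figure for how much of the advertised capacity actually holds data.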

It was good to see that the values matched, which boosted my confidence in the ChipGenius tool. I did find some references on the web claiming that ChipGenius is sometimes wrong, so it's worth checking the markings on the chips themselves.

Part of the output was also a link to a website, yet even with Google Translate the site left me guessing as to what I should download; there were a lot of possible options. I chose one near the top, “Series master, the Alcor MPtool AU6987T/6989 Yasukuni, production tools (2011.12.26.00)”, because its title matched some of the data in the ChipGenius dump. In the end, the site's instability, the language barrier and my inability to download anything successfully sent me off down other paths.

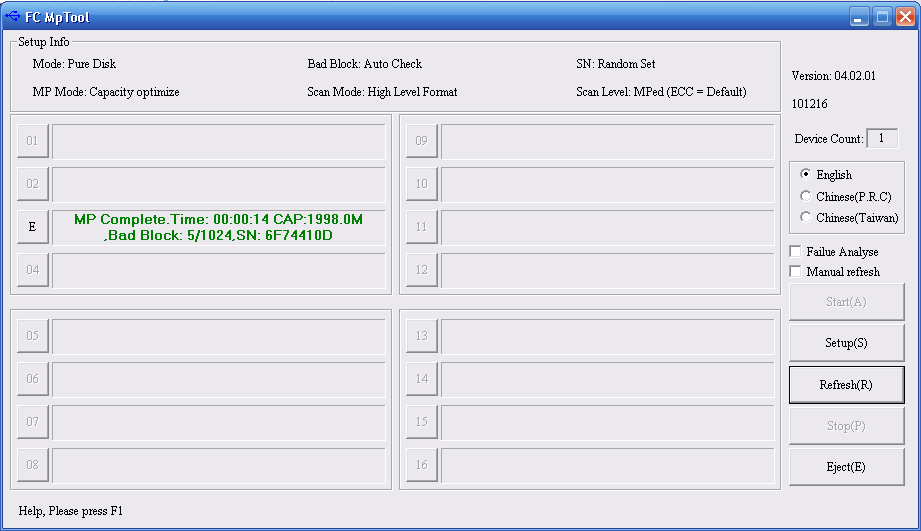

I then ended up on flashboot.ru, where searching for the controller chip number (FC8708) let me find and download (with a bit of Google Translate help) a tool that recognized the drive. The best way to find this tool is to search for its name: FC_MpTool_FC8308_FC8508_FC8406_04.02.01.

The user interface was mysterious, but clicking on the drive letter started a reformat, which resulted in a 2GB flash drive. The newly formatted drive was quite happy under Linux.

In terms of performance, I benchmarked copying 7 x ~300MB video files (1.9GB total) to the stick, which reported ~4.7MB/sec. The file progress dialog in Ubuntu certainly updated in bursts. This isn't great, but again, it was free and it works under Linux. I also tried zeroing the entire drive ($ sudo dd if=/dev/zero of=/dev/sdc), which reported: 2095054848 bytes (2.1 GB) copied, 335.285 s, 6.2 MB/s. Again, not great, but good enough.
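The dd numbers are easy to sanity-check with a bit of arithmetic. GNU dd reports sizes and rates in SI units (1 MB = 10^6 bytes), which is how 2095054848 bytes over 335.285 s comes out at 6.2 MB/s. The same byte count is only about 1.95 GiB in binary units, and it is 4091904 writes of dd's default 512-byte block. The helper names below are mine, and treating the 1.9GB copy as decimal gigabytes is an assumption.

```python
SECTOR_BYTES = 512  # GNU dd's default block size when bs= is not given
MB = 10**6          # dd reports sizes and rates in SI units (1 MB = 10^6 bytes)
GIB = 2**30         # binary gibibyte, as many OS tools report capacity


def throughput_mb_s(n_bytes: int, seconds: float) -> float:
    """Average transfer rate in SI megabytes per second."""
    return n_bytes / seconds / MB


def seconds_for(n_bytes: float, rate_mb_s: float) -> float:
    """Time to move n_bytes at a constant rate given in SI MB/s."""
    return n_bytes / (rate_mb_s * MB)


zeroed = 2095054848  # bytes dd reported writing across the whole stick
print(f"{throughput_mb_s(zeroed, 335.285):.1f} MB/s")
print(f"{zeroed / GIB:.2f} GiB, {zeroed // SECTOR_BYTES} writes of {SECTOR_BYTES} bytes")
# Assumes the 1.9GB video copy means 1.9 * 10^9 bytes.
print(f"{seconds_for(1.9e9, 4.7):.0f} s to copy 1.9 GB at 4.7 MB/s")
```

At ~4.7MB/sec, the video copy works out to roughly 400 seconds, a bit under seven minutes.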

A bit of heat shrink tubing to cover up the bare circuit board, and I've got a hack-worthy USB key.